@@ -237,4 +237,4 @@ Want to see how it works? Check out the video below:

!!! info "What's next?"

1. See [SSH fleets](../../docs/concepts/fleets.md#ssh-fleets)

2. Read about [dev environments](../../docs/concepts/dev-environments.md), [tasks](../../docs/concepts/tasks.md), and [services](../../docs/concepts/services.md)

- 3. Join [Discord :material-arrow-top-right-thin:{ .external }](https://discord.gg/u8SmfwPpMd)

+ 3. Join [Discord](https://discord.gg/u8SmfwPpMd)

diff --git a/docs/blog/posts/benchmark-amd-containers-and-partitions.md b/docs/blog/posts/benchmark-amd-containers-and-partitions.md

index cf1d8baaa..8b945aaba 100644

--- a/docs/blog/posts/benchmark-amd-containers-and-partitions.md

+++ b/docs/blog/posts/benchmark-amd-containers-and-partitions.md

@@ -16,7 +16,7 @@ Our new benchmark explores two important areas for optimizing AI workloads on AM

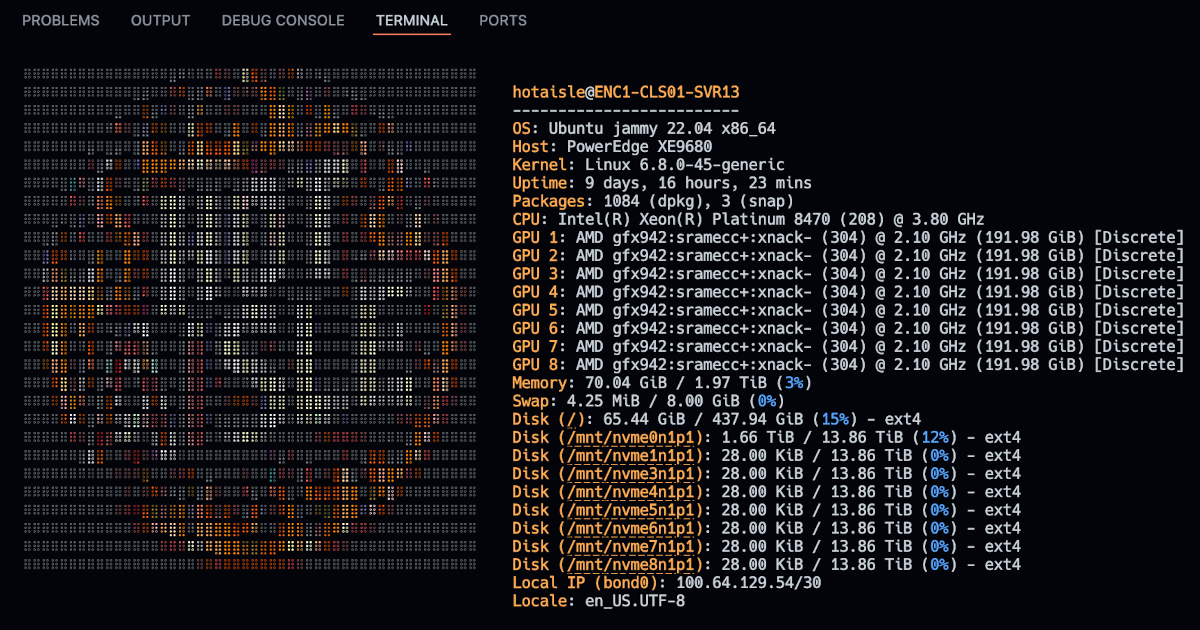

-This benchmark was supported by [Hot Aisle :material-arrow-top-right-thin:{ .external }](https://hotaisle.xyz/){:target="_blank"},

+This benchmark was supported by [Hot Aisle](https://hotaisle.xyz/),

a provider of AMD GPU bare-metal and VM infrastructure.

## Benchmark 1: Bare-metal vs containers

@@ -56,11 +56,11 @@ Our experiments consistently demonstrate that running multi-node AI workloads in

## Benchmark 2: Partition performance isolated vs mesh

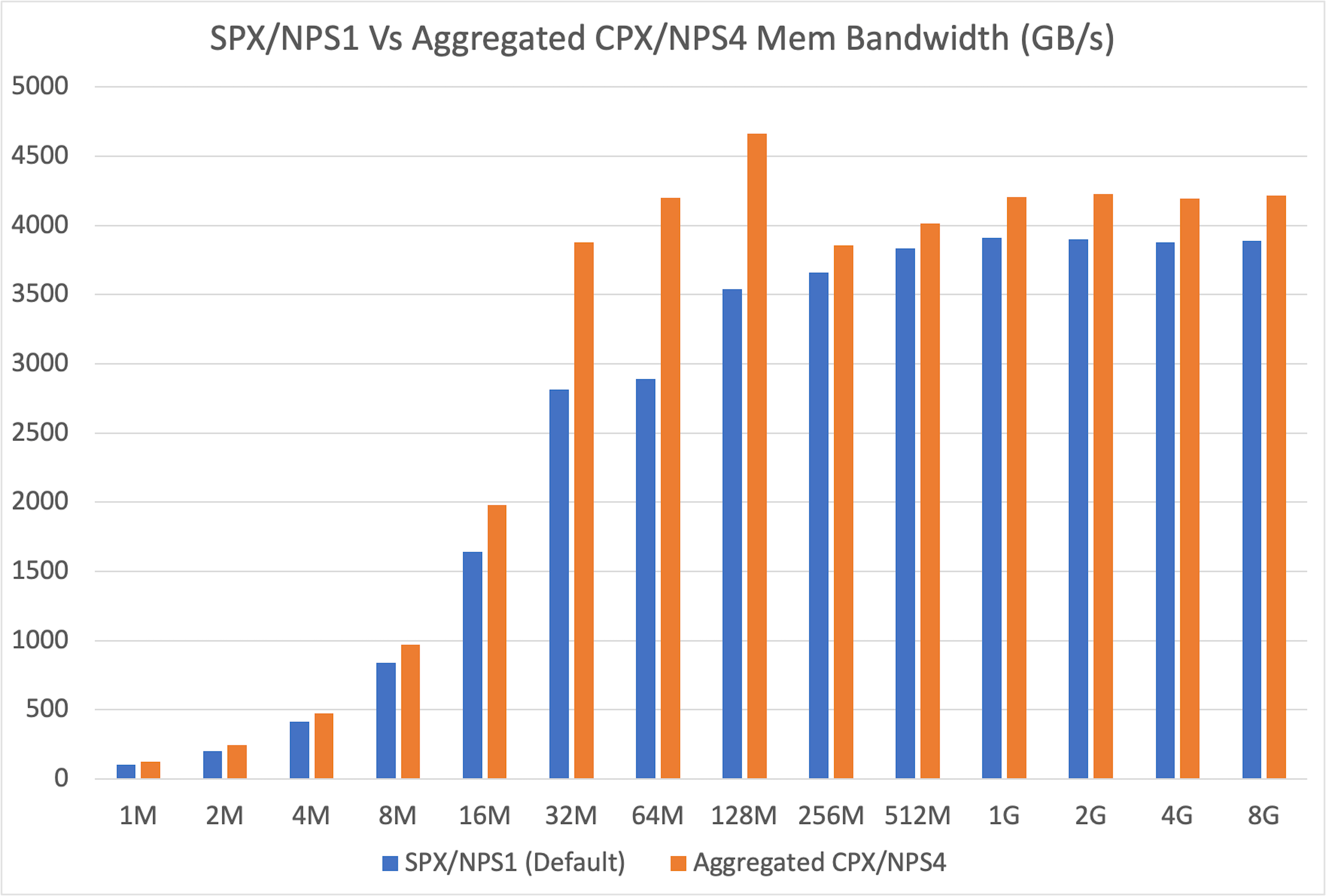

-The AMD GPU can be [partitioned :material-arrow-top-right-thin:{ .external }](https://instinct.docs.amd.com/projects/amdgpu-docs/en/latest/gpu-partitioning/mi300x/overview.html){:target="_blank"} into smaller, independent units (e.g., NPS4 mode splits one GPU into four partitions). This promises better memory bandwidth utilization. Does this theoretical gain translate to better performance in practice?

+The AMD GPU can be [partitioned](https://instinct.docs.amd.com/projects/amdgpu-docs/en/latest/gpu-partitioning/mi300x/overview.html) into smaller, independent units (e.g., NPS4 mode splits one GPU into four partitions). This promises better memory bandwidth utilization. Does this theoretical gain translate to better performance in practice?

### Finding 1: Higher performance for isolated partitions

-First, we sought to reproduce and extend findings from the [official ROCm blog :material-arrow-top-right-thin:{ .external }](https://rocm.blogs.amd.com/software-tools-optimization/compute-memory-modes/README.html){:target="_blank"}. We benchmarked the memory bandwidth of a single partition (in CPX/NPS4 mode) against a full, unpartitioned GPU (in SPX/NPS1 mode).

+First, we sought to reproduce and extend findings from the [official ROCm blog](https://rocm.blogs.amd.com/software-tools-optimization/compute-memory-modes/README.html). We benchmarked the memory bandwidth of a single partition (in CPX/NPS4 mode) against a full, unpartitioned GPU (in SPX/NPS1 mode).

@@ -237,4 +237,4 @@ Want to see how it works? Check out the video below:

!!! info "What's next?"

1. See [SSH fleets](../../docs/concepts/fleets.md#ssh-fleets)

2. Read about [dev environments](../../docs/concepts/dev-environments.md), [tasks](../../docs/concepts/tasks.md), and [services](../../docs/concepts/services.md)

- 3. Join [Discord :material-arrow-top-right-thin:{ .external }](https://discord.gg/u8SmfwPpMd)

+ 3. Join [Discord](https://discord.gg/u8SmfwPpMd)

diff --git a/docs/blog/posts/benchmark-amd-containers-and-partitions.md b/docs/blog/posts/benchmark-amd-containers-and-partitions.md

index cf1d8baaa..8b945aaba 100644

--- a/docs/blog/posts/benchmark-amd-containers-and-partitions.md

+++ b/docs/blog/posts/benchmark-amd-containers-and-partitions.md

@@ -16,7 +16,7 @@ Our new benchmark explores two important areas for optimizing AI workloads on AM

-This benchmark was supported by [Hot Aisle :material-arrow-top-right-thin:{ .external }](https://hotaisle.xyz/){:target="_blank"},

+This benchmark was supported by [Hot Aisle](https://hotaisle.xyz/),

a provider of AMD GPU bare-metal and VM infrastructure.

## Benchmark 1: Bare-metal vs containers

@@ -56,11 +56,11 @@ Our experiments consistently demonstrate that running multi-node AI workloads in

## Benchmark 2: Partition performance isolated vs mesh

-The AMD GPU can be [partitioned :material-arrow-top-right-thin:{ .external }](https://instinct.docs.amd.com/projects/amdgpu-docs/en/latest/gpu-partitioning/mi300x/overview.html){:target="_blank"} into smaller, independent units (e.g., NPS4 mode splits one GPU into four partitions). This promises better memory bandwidth utilization. Does this theoretical gain translate to better performance in practice?

+The AMD GPU can be [partitioned](https://instinct.docs.amd.com/projects/amdgpu-docs/en/latest/gpu-partitioning/mi300x/overview.html) into smaller, independent units (e.g., NPS4 mode splits one GPU into four partitions). This promises better memory bandwidth utilization. Does this theoretical gain translate to better performance in practice?

### Finding 1: Higher performance for isolated partitions

-First, we sought to reproduce and extend findings from the [official ROCm blog :material-arrow-top-right-thin:{ .external }](https://rocm.blogs.amd.com/software-tools-optimization/compute-memory-modes/README.html){:target="_blank"}. We benchmarked the memory bandwidth of a single partition (in CPX/NPS4 mode) against a full, unpartitioned GPU (in SPX/NPS1 mode).

+First, we sought to reproduce and extend findings from the [official ROCm blog](https://rocm.blogs.amd.com/software-tools-optimization/compute-memory-modes/README.html). We benchmarked the memory bandwidth of a single partition (in CPX/NPS4 mode) against a full, unpartitioned GPU (in SPX/NPS1 mode).

@@ -100,7 +100,7 @@ GPU partitioning is only practical if used dynamically—for instance, to run mu

#### Limitations

1. **Reproducibility**: AMD’s original blog post on partitioning lacked detailed setup information, so we had to reconstruct the benchmarks independently.

-2. **Network tuning**: These benchmarks were run on a default, out-of-the-box network configuration. Our results for RCCL (~339 GB/s) and RDMA (~726 Gbps) are slightly below the peak figures [reported by Dell :material-arrow-top-right-thin:{ .external }](https://infohub.delltechnologies.com/en-us/l/generative-ai-in-the-enterprise-with-amd-accelerators/rccl-and-perftest-for-cluster-validation-1/4/){:target="_blank"}. This suggests that further performance could be unlocked with expert tuning of network topology, MTU size, and NCCL environment variables.

+2. **Network tuning**: These benchmarks were run on a default, out-of-the-box network configuration. Our results for RCCL (~339 GB/s) and RDMA (~726 Gbps) are slightly below the peak figures [reported by Dell](https://infohub.delltechnologies.com/en-us/l/generative-ai-in-the-enterprise-with-amd-accelerators/rccl-and-perftest-for-cluster-validation-1/4/). This suggests that further performance could be unlocked with expert tuning of network topology, MTU size, and NCCL environment variables.

## Benchmark setup

@@ -352,7 +352,7 @@ The `SIZE` value is `1M`, `2M`, .., `8G`.

**vLLM data parallel**

-1. Build nginx container (see [vLLM-nginx :material-arrow-top-right-thin:{ .external }](https://docs.vllm.ai/en/stable/deployment/nginx.html#build-nginx-container){:target="_blank"}).

+1. Build nginx container (see [vLLM-nginx](https://docs.vllm.ai/en/stable/deployment/nginx.html#build-nginx-container)).

2. Create `nginx.conf`

@@ -471,13 +471,13 @@ HIP_VISIBLE_DEVICES=0 python3 toy_inference_benchmark.py \

## Source code

-All source code and findings are available in [our GitHub repo :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/benchmarks/tree/main/amd/baremetal_container_partition){:target="_blank"}.

+All source code and findings are available in [our GitHub repo](https://github.com/dstackai/benchmarks/tree/main/amd/baremetal_container_partition).

## References

-* [AMD Instinct MI300X GPU partitioning overview :material-arrow-top-right-thin:{ .external }](https://instinct.docs.amd.com/projects/amdgpu-docs/en/latest/gpu-partitioning/mi300x/overview.html){:target="_blank"}

-* [Deep dive into partition modes by AMD :material-arrow-top-right-thin:{ .external }](https://rocm.blogs.amd.com/software-tools-optimization/compute-memory-modes/README.html){:target="_blank"}.

-* [RCCL and PerfTest for cluster validation by Dell :material-arrow-top-right-thin:{ .external }](https://infohub.delltechnologies.com/en-us/l/generative-ai-in-the-enterprise-with-amd-accelerators/rccl-and-perftest-for-cluster-validation-1/4/){:target="_blank"}.

+* [AMD Instinct MI300X GPU partitioning overview](https://instinct.docs.amd.com/projects/amdgpu-docs/en/latest/gpu-partitioning/mi300x/overview.html)

+* [Deep dive into partition modes by AMD](https://rocm.blogs.amd.com/software-tools-optimization/compute-memory-modes/README.html).

+* [RCCL and PerfTest for cluster validation by Dell](https://infohub.delltechnologies.com/en-us/l/generative-ai-in-the-enterprise-with-amd-accelerators/rccl-and-perftest-for-cluster-validation-1/4/).

## What's next?

@@ -487,5 +487,5 @@ Benchmark the performance impact of VMs vs bare-metal for inference and training

#### Hot Aisle

-Big thanks to [Hot Aisle :material-arrow-top-right-thin:{ .external }](https://hotaisle.xyz/){:target="_blank"} for providing the compute power behind these benchmarks.

+Big thanks to [Hot Aisle](https://hotaisle.xyz/) for providing the compute power behind these benchmarks.

If you’re looking for fast AMD GPU bare-metal or VM instances, they’re definitely worth checking out.

diff --git a/docs/blog/posts/benchmark-amd-vms.md b/docs/blog/posts/benchmark-amd-vms.md

index 099fee9be..b8d9105d0 100644

--- a/docs/blog/posts/benchmark-amd-vms.md

+++ b/docs/blog/posts/benchmark-amd-vms.md

@@ -18,7 +18,7 @@ This is the first in our series of benchmarks exploring the performance of AMD G

Our findings reveal that for single-GPU LLM training and inference, both setups deliver comparable performance. The subtle differences we observed highlight how virtualization overhead can influence performance under specific conditions, but for most practical purposes, the performance is nearly identical.

-This benchmark was supported by [Hot Aisle :material-arrow-top-right-thin:{ .external }](https://hotaisle.xyz/){:target="_blank"},

+This benchmark was supported by [Hot Aisle](https://hotaisle.xyz/),

a provider of AMD GPU bare-metal and VM infrastructure.

## Benchmark 1: Inference

@@ -201,11 +201,11 @@ python3 trl/scripts/sft.py \

## Source code

-All source code and findings are available in our [GitHub repo :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/benchmarks/tree/main/amd/single_gpu_vm_vs_bare-metal){:target="_blank"}.

+All source code and findings are available in our [GitHub repo](https://github.com/dstackai/benchmarks/tree/main/amd/single_gpu_vm_vs_bare-metal).

## References

-* [vLLM V1 Meets AMD Instinct GPUs: A New Era for LLM Inference Performance :material-arrow-top-right-thin:{ .external }](https://rocm.blogs.amd.com/software-tools-optimization/vllmv1-rocm-llm/README.html){:target="_blank"}

+* [vLLM V1 Meets AMD Instinct GPUs: A New Era for LLM Inference Performance](https://rocm.blogs.amd.com/software-tools-optimization/vllmv1-rocm-llm/README.html)

## What's next?

@@ -215,5 +215,5 @@ Our next steps are to benchmark VM vs. bare-metal performance in multi-GPU and m

#### Hot Aisle

-Big thanks to [Hot Aisle :material-arrow-top-right-thin:{ .external }](https://hotaisle.xyz/){:target="_blank"} for providing the compute power behind these benchmarks.

+Big thanks to [Hot Aisle](https://hotaisle.xyz/) for providing the compute power behind these benchmarks.

If you’re looking for fast AMD GPU bare-metal or VM instances, they’re definitely worth checking out.

diff --git a/docs/blog/posts/benchmarking-pd-ratios.md b/docs/blog/posts/benchmarking-pd-ratios.md

index 1069c5794..c303163e1 100644

--- a/docs/blog/posts/benchmarking-pd-ratios.md

+++ b/docs/blog/posts/benchmarking-pd-ratios.md

@@ -21,19 +21,19 @@ We evaluate different ratios across workload profiles and concurrency levels to

### What is Prefill–Decode disaggregation?

-LLM inference has two distinct phases: prefill and decode. Prefill processes all prompt tokens in parallel and is compute-intensive. Decode generates tokens one by one, repeatedly accessing the KV-cache, making it memory- and bandwidth-intensive. DistServe ([Zhong et al., 2024 :material-arrow-top-right-thin:{ .external }](https://arxiv.org/pdf/2401.09670){:target="_blank"}) introduced prefill–decode disaggregation to separate these phases across dedicated workers, reducing interference and enabling hardware to be allocated more efficiently.

+LLM inference has two distinct phases: prefill and decode. Prefill processes all prompt tokens in parallel and is compute-intensive. Decode generates tokens one by one, repeatedly accessing the KV-cache, making it memory- and bandwidth-intensive. DistServe ([Zhong et al., 2024](https://arxiv.org/pdf/2401.09670)) introduced prefill–decode disaggregation to separate these phases across dedicated workers, reducing interference and enabling hardware to be allocated more efficiently.

### What is the prefill–decode ratio?

The ratio of prefill to decode workers determines how much capacity is dedicated to each phase. DistServe showed that for a workload with ISL=512 and OSL=64, a 2:1 ratio met both TTFT and TPOT targets. But this example does not answer how the ratio should be chosen more generally, or whether it needs to change at runtime.

!!! info "Reasoning model example"

- In the DeepSeek deployment ([LMSYS, 2025 :material-arrow-top-right-thin:{ .external }](https://lmsys.org/blog/2025-05-05-large-scale-ep){:target="_blank"}), the ratio was 1:3. This decode-leaning split reflects reasoning workloads, where long outputs dominate. Allocating more workers to decode reduces inter-token latency and keeps responses streaming smoothly.

+ In the DeepSeek deployment ([LMSYS, 2025](https://lmsys.org/blog/2025-05-05-large-scale-ep)), the ratio was 1:3. This decode-leaning split reflects reasoning workloads, where long outputs dominate. Allocating more workers to decode reduces inter-token latency and keeps responses streaming smoothly.

### Dynamic ratio

-Dynamic approaches, such as NVIDIA’s [SLA-based :material-arrow-top-right-thin:{ .external }](https://docs.nvidia.com/dynamo/latest/architecture/sla_planner.html){:target="_blank"}

-and [Load-based :material-arrow-top-right-thin:{ .external }](https://docs.nvidia.com/dynamo/latest/architecture/load_planner.html){:target="_blank"} planners, adjust the ratio at runtime according to SLO targets or load. However, they do this in conjunction with auto-scaling, which increases orchestration complexity. This raises the question: does the prefill–decode ratio really need to be dynamic, or can a fixed ratio be chosen ahead of time and still provide robust performance?

+Dynamic approaches, such as NVIDIA’s [SLA-based](https://docs.nvidia.com/dynamo/latest/architecture/sla_planner.html)

+and [Load-based](https://docs.nvidia.com/dynamo/latest/architecture/load_planner.html) planners, adjust the ratio at runtime according to SLO targets or load. However, they do this in conjunction with auto-scaling, which increases orchestration complexity. This raises the question: does the prefill–decode ratio really need to be dynamic, or can a fixed ratio be chosen ahead of time and still provide robust performance?

## Benchmark purpose

@@ -72,7 +72,7 @@ If a fixed ratio consistently performs well across these metrics, it would indic

* **Model**: `openai/gpt-oss-120b`

* **Backend**: SGLang

-For full steps and raw data, see the [GitHub repo :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/benchmarks/tree/main/comparison/pd_ratio){:target="_blank"}.

+For full steps and raw data, see the [GitHub repo](https://github.com/dstackai/benchmarks/tree/main/comparison/pd_ratio).

## Finding 1: Prefill-heavy workloads

@@ -134,8 +134,8 @@ Overall, more study on how the optimal ratio is found and what factors it depend

## References

-* [DistServe :material-arrow-top-right-thin:{ .external }](https://arxiv.org/pdf/2401.09670){:target="_blank"}

-* [DeepSeek deployment on 96 H100 GPUs :material-arrow-top-right-thin:{ .external }](https://lmsys.org/blog/2025-05-05-large-scale-ep/){:target="_blank"}

-* [Dynamo disaggregated serving :material-arrow-top-right-thin:{ .external }](https://docs.nvidia.com/dynamo/latest/architecture/disagg_serving.html#){:target="_blank"}

-* [SGLang PD disaggregation :material-arrow-top-right-thin:{ .external }](https://docs.sglang.ai/advanced_features/pd_disaggregation.html){:target="_blank"}

-* [vLLM disaggregated prefilling :material-arrow-top-right-thin:{ .external }](https://docs.vllm.ai/en/v0.9.2/features/disagg_prefill.html){:target="_blank"}

+* [DistServe](https://arxiv.org/pdf/2401.09670)

+* [DeepSeek deployment on 96 H100 GPUs](https://lmsys.org/blog/2025-05-05-large-scale-ep/)

+* [Dynamo disaggregated serving](https://docs.nvidia.com/dynamo/latest/architecture/disagg_serving.html#)

+* [SGLang PD disaggregation](https://docs.sglang.ai/advanced_features/pd_disaggregation.html)

+* [vLLM disaggregated prefilling](https://docs.vllm.ai/en/v0.9.2/features/disagg_prefill.html)

diff --git a/docs/blog/posts/beyond-kubernetes-2024-recap-and-whats-ahead.md b/docs/blog/posts/beyond-kubernetes-2024-recap-and-whats-ahead.md

index dec4945ed..4c6b43f9b 100644

--- a/docs/blog/posts/beyond-kubernetes-2024-recap-and-whats-ahead.md

+++ b/docs/blog/posts/beyond-kubernetes-2024-recap-and-whats-ahead.md

@@ -21,25 +21,25 @@ As 2024 comes to a close, we reflect on the milestones we've achieved and look a

While `dstack` integrates with leading cloud GPU providers, we aim to expand partnerships with more providers

sharing our vision of simplifying AI infrastructure orchestration with a lightweight, efficient alternative to Kubernetes.

-This year, we’re excited to welcome our first partners: [Lambda :material-arrow-top-right-thin:{ .external }](https://lambdalabs.com/){:target="_blank"},

-[RunPod :material-arrow-top-right-thin:{ .external }](https://www.runpod.io/){:target="_blank"},

-[CUDO Compute :material-arrow-top-right-thin:{ .external }](https://www.cudocompute.com/){:target="_blank"},

-and [Hot Aisle :material-arrow-top-right-thin:{ .external }](https://hotaisle.xyz/){:target="_blank"}.

+This year, we’re excited to welcome our first partners: [Lambda](https://lambdalabs.com/),

+[RunPod](https://www.runpod.io/),

+[CUDO Compute](https://www.cudocompute.com/),

+and [Hot Aisle](https://hotaisle.xyz/).

-We’d also like to thank [Oracle :material-arrow-top-right-thin:{ .external }](https://www.oracle.com/cloud/){:target="_blank"}

+We’d also like to thank [Oracle ](https://www.oracle.com/cloud/)

for their collaboration, ensuring seamless integration between `dstack` and OCI.

-> Special thanks to [Lambda :material-arrow-top-right-thin:{ .external }](https://lambdalabs.com/){:target="_blank"} and

-> [Hot Aisle :material-arrow-top-right-thin:{ .external }](https://hotaisle.xyz/){:target="_blank"} for providing NVIDIA and AMD hardware, enabling us conducting

+> Special thanks to [Lambda](https://lambdalabs.com/) and

+> [Hot Aisle](https://hotaisle.xyz/) for providing NVIDIA and AMD hardware, enabling us conducting

> [benchmarks](/blog/category/benchmarks/), which

> are essential to advancing open-source inference and training stacks for all accelerator chips.

## Community

Thanks to your support, the project has

-reached [1.6K stars on GitHub :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/dstack){:target="_blank"},

+reached [1.6K stars on GitHub](https://github.com/dstackai/dstack),

reflecting the growing interest and trust in its mission.

-Your issues, pull requests, as well as feedback on [Discord :material-arrow-top-right-thin:{ .external }](https://discord.gg/u8SmfwPpMd){:target="_blank"}, play a

+Your issues, pull requests, as well as feedback on [Discord](https://discord.gg/u8SmfwPpMd), play a

critical role in the project's development.

## Fleets

@@ -87,7 +87,7 @@ This turns your on-prem cluster into a `dstack` fleet, ready to run dev environm

### GPU blocks

At `dstack`, when running a job on an instance, it uses all available GPUs on that instance. In Q1 2025, we will

-introduce [GPU blocks :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/dstack/issues/1780){:target="_blank"},

+introduce [GPU blocks](https://github.com/dstackai/dstack/issues/1780),

allowing the allocation of instance GPUs into discrete blocks that can be reused by concurrent jobs.

This will enable more cost-efficient utilization of expensive instances.

@@ -112,16 +112,16 @@ for model deployment, and we continue to enhance support for the rest of NVIDIA'

This year, we’re particularly proud of our newly added integration with AMD.

`dstack` works seamlessly with any on-prem AMD clusters. For example, you can rent such servers through our partner

-[Hot Aisle :material-arrow-top-right-thin:{ .external }](https://hotaisle.xyz/){:target="_blank"}.

+[Hot Aisle](https://hotaisle.xyz/).

-> Among cloud providers, [AMD :material-arrow-top-right-thin:{ .external }](https://www.amd.com/en/products/accelerators/instinct.html){:target="_blank"} is supported only through RunPod. In Q1 2025, we plan to extend it to

-[Nscale :material-arrow-top-right-thin:{ .external }](https://www.nscale.com/){:target="_blank"},

-> [Hot Aisle :material-arrow-top-right-thin:{ .external }](https://hotaisle.xyz/){:target="_blank"}, and potentially other providers open to collaboration.

+> Among cloud providers, [AMD](https://www.amd.com/en/products/accelerators/instinct.html) is supported only through RunPod. In Q1 2025, we plan to extend it to

+[Nscale](https://www.nscale.com/),

+> [Hot Aisle](https://hotaisle.xyz/), and potentially other providers open to collaboration.

### Intel

In Q1 2025, our roadmap includes added integration with

-[Intel Gaudi :material-arrow-top-right-thin:{ .external }](https://www.intel.com/content/www/us/en/products/details/processors/ai-accelerators/gaudi-overview.html){:target="_blank"}

+[Intel Gaudi](https://www.intel.com/content/www/us/en/products/details/processors/ai-accelerators/gaudi-overview.html)

among other accelerator chips.

## Join the community

diff --git a/docs/blog/posts/changelog-07-25.md b/docs/blog/posts/changelog-07-25.md

index 13bf67f54..909fa2859 100644

--- a/docs/blog/posts/changelog-07-25.md

+++ b/docs/blog/posts/changelog-07-25.md

@@ -112,7 +112,7 @@ resources:

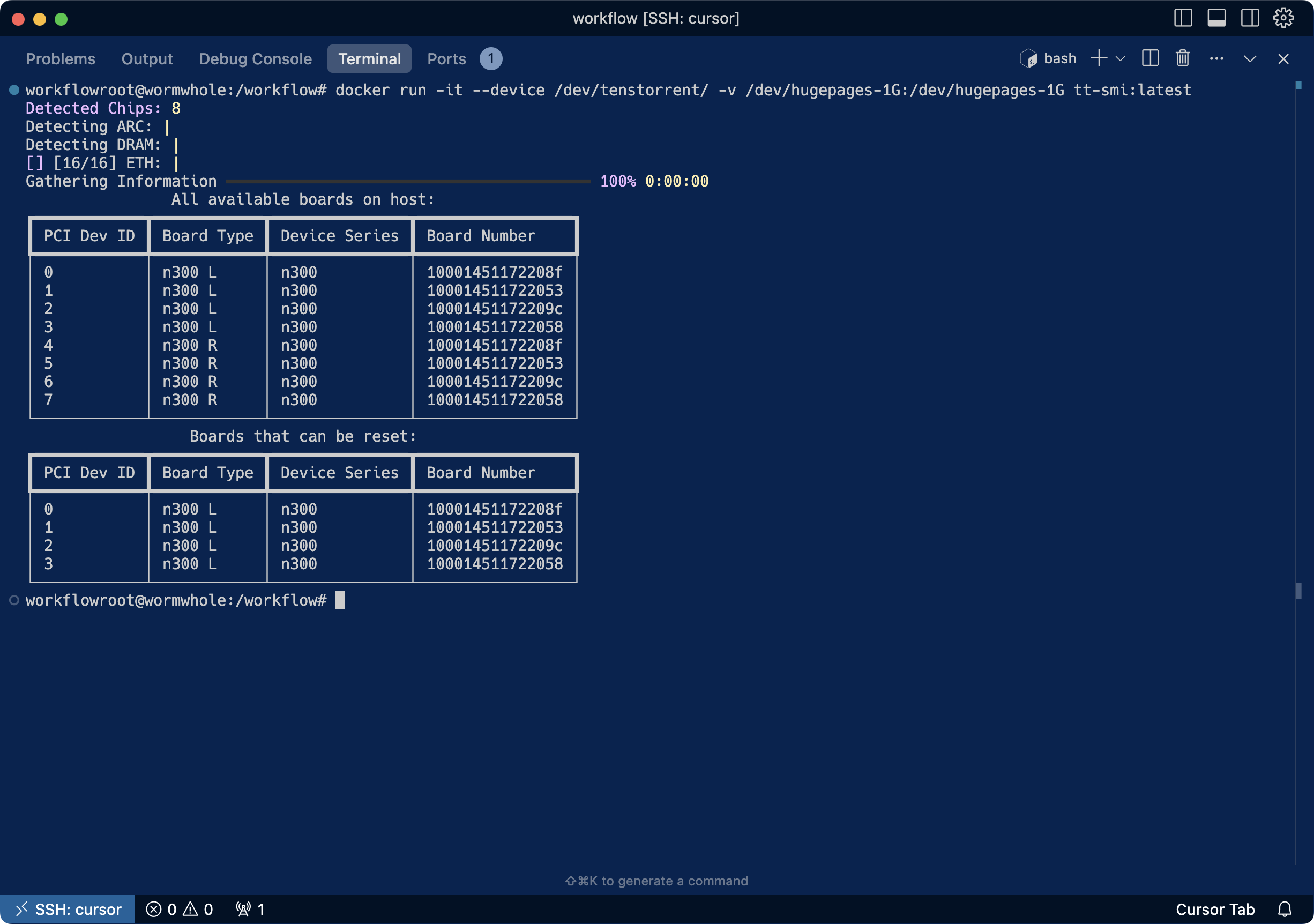

#### Tenstorrent

-`dstack` remains committed to supporting multiple GPU vendors—including NVIDIA, AMD, TPUs, and more recently, [Tenstorrent :material-arrow-top-right-thin:{ .external }](https://tenstorrent.com/){:target="_blank"}. The latest release improves Tenstorrent support by handling hosts with multiple N300 cards and adds Docker-in-Docker support.

+`dstack` remains committed to supporting multiple GPU vendors—including NVIDIA, AMD, TPUs, and more recently, [Tenstorrent](https://tenstorrent.com/). The latest release improves Tenstorrent support by handling hosts with multiple N300 cards and adds Docker-in-Docker support.

@@ -100,7 +100,7 @@ GPU partitioning is only practical if used dynamically—for instance, to run mu

#### Limitations

1. **Reproducibility**: AMD’s original blog post on partitioning lacked detailed setup information, so we had to reconstruct the benchmarks independently.

-2. **Network tuning**: These benchmarks were run on a default, out-of-the-box network configuration. Our results for RCCL (~339 GB/s) and RDMA (~726 Gbps) are slightly below the peak figures [reported by Dell :material-arrow-top-right-thin:{ .external }](https://infohub.delltechnologies.com/en-us/l/generative-ai-in-the-enterprise-with-amd-accelerators/rccl-and-perftest-for-cluster-validation-1/4/){:target="_blank"}. This suggests that further performance could be unlocked with expert tuning of network topology, MTU size, and NCCL environment variables.

+2. **Network tuning**: These benchmarks were run on a default, out-of-the-box network configuration. Our results for RCCL (~339 GB/s) and RDMA (~726 Gbps) are slightly below the peak figures [reported by Dell](https://infohub.delltechnologies.com/en-us/l/generative-ai-in-the-enterprise-with-amd-accelerators/rccl-and-perftest-for-cluster-validation-1/4/). This suggests that further performance could be unlocked with expert tuning of network topology, MTU size, and NCCL environment variables.

## Benchmark setup

@@ -352,7 +352,7 @@ The `SIZE` value is `1M`, `2M`, .., `8G`.

**vLLM data parallel**

-1. Build nginx container (see [vLLM-nginx :material-arrow-top-right-thin:{ .external }](https://docs.vllm.ai/en/stable/deployment/nginx.html#build-nginx-container){:target="_blank"}).

+1. Build nginx container (see [vLLM-nginx](https://docs.vllm.ai/en/stable/deployment/nginx.html#build-nginx-container)).

2. Create `nginx.conf`

@@ -471,13 +471,13 @@ HIP_VISIBLE_DEVICES=0 python3 toy_inference_benchmark.py \

## Source code

-All source code and findings are available in [our GitHub repo :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/benchmarks/tree/main/amd/baremetal_container_partition){:target="_blank"}.

+All source code and findings are available in [our GitHub repo](https://github.com/dstackai/benchmarks/tree/main/amd/baremetal_container_partition).

## References

-* [AMD Instinct MI300X GPU partitioning overview :material-arrow-top-right-thin:{ .external }](https://instinct.docs.amd.com/projects/amdgpu-docs/en/latest/gpu-partitioning/mi300x/overview.html){:target="_blank"}

-* [Deep dive into partition modes by AMD :material-arrow-top-right-thin:{ .external }](https://rocm.blogs.amd.com/software-tools-optimization/compute-memory-modes/README.html){:target="_blank"}.

-* [RCCL and PerfTest for cluster validation by Dell :material-arrow-top-right-thin:{ .external }](https://infohub.delltechnologies.com/en-us/l/generative-ai-in-the-enterprise-with-amd-accelerators/rccl-and-perftest-for-cluster-validation-1/4/){:target="_blank"}.

+* [AMD Instinct MI300X GPU partitioning overview](https://instinct.docs.amd.com/projects/amdgpu-docs/en/latest/gpu-partitioning/mi300x/overview.html)

+* [Deep dive into partition modes by AMD](https://rocm.blogs.amd.com/software-tools-optimization/compute-memory-modes/README.html).

+* [RCCL and PerfTest for cluster validation by Dell](https://infohub.delltechnologies.com/en-us/l/generative-ai-in-the-enterprise-with-amd-accelerators/rccl-and-perftest-for-cluster-validation-1/4/).

## What's next?

@@ -487,5 +487,5 @@ Benchmark the performance impact of VMs vs bare-metal for inference and training

#### Hot Aisle

-Big thanks to [Hot Aisle :material-arrow-top-right-thin:{ .external }](https://hotaisle.xyz/){:target="_blank"} for providing the compute power behind these benchmarks.

+Big thanks to [Hot Aisle](https://hotaisle.xyz/) for providing the compute power behind these benchmarks.

If you’re looking for fast AMD GPU bare-metal or VM instances, they’re definitely worth checking out.

diff --git a/docs/blog/posts/benchmark-amd-vms.md b/docs/blog/posts/benchmark-amd-vms.md

index 099fee9be..b8d9105d0 100644

--- a/docs/blog/posts/benchmark-amd-vms.md

+++ b/docs/blog/posts/benchmark-amd-vms.md

@@ -18,7 +18,7 @@ This is the first in our series of benchmarks exploring the performance of AMD G

Our findings reveal that for single-GPU LLM training and inference, both setups deliver comparable performance. The subtle differences we observed highlight how virtualization overhead can influence performance under specific conditions, but for most practical purposes, the performance is nearly identical.

-This benchmark was supported by [Hot Aisle :material-arrow-top-right-thin:{ .external }](https://hotaisle.xyz/){:target="_blank"},

+This benchmark was supported by [Hot Aisle](https://hotaisle.xyz/),

a provider of AMD GPU bare-metal and VM infrastructure.

## Benchmark 1: Inference

@@ -201,11 +201,11 @@ python3 trl/scripts/sft.py \

## Source code

-All source code and findings are available in our [GitHub repo :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/benchmarks/tree/main/amd/single_gpu_vm_vs_bare-metal){:target="_blank"}.

+All source code and findings are available in our [GitHub repo](https://github.com/dstackai/benchmarks/tree/main/amd/single_gpu_vm_vs_bare-metal).

## References

-* [vLLM V1 Meets AMD Instinct GPUs: A New Era for LLM Inference Performance :material-arrow-top-right-thin:{ .external }](https://rocm.blogs.amd.com/software-tools-optimization/vllmv1-rocm-llm/README.html){:target="_blank"}

+* [vLLM V1 Meets AMD Instinct GPUs: A New Era for LLM Inference Performance](https://rocm.blogs.amd.com/software-tools-optimization/vllmv1-rocm-llm/README.html)

## What's next?

@@ -215,5 +215,5 @@ Our next steps are to benchmark VM vs. bare-metal performance in multi-GPU and m

#### Hot Aisle

-Big thanks to [Hot Aisle :material-arrow-top-right-thin:{ .external }](https://hotaisle.xyz/){:target="_blank"} for providing the compute power behind these benchmarks.

+Big thanks to [Hot Aisle](https://hotaisle.xyz/) for providing the compute power behind these benchmarks.

If you’re looking for fast AMD GPU bare-metal or VM instances, they’re definitely worth checking out.

diff --git a/docs/blog/posts/benchmarking-pd-ratios.md b/docs/blog/posts/benchmarking-pd-ratios.md

index 1069c5794..c303163e1 100644

--- a/docs/blog/posts/benchmarking-pd-ratios.md

+++ b/docs/blog/posts/benchmarking-pd-ratios.md

@@ -21,19 +21,19 @@ We evaluate different ratios across workload profiles and concurrency levels to

### What is Prefill–Decode disaggregation?

-LLM inference has two distinct phases: prefill and decode. Prefill processes all prompt tokens in parallel and is compute-intensive. Decode generates tokens one by one, repeatedly accessing the KV-cache, making it memory- and bandwidth-intensive. DistServe ([Zhong et al., 2024 :material-arrow-top-right-thin:{ .external }](https://arxiv.org/pdf/2401.09670){:target="_blank"}) introduced prefill–decode disaggregation to separate these phases across dedicated workers, reducing interference and enabling hardware to be allocated more efficiently.

+LLM inference has two distinct phases: prefill and decode. Prefill processes all prompt tokens in parallel and is compute-intensive. Decode generates tokens one by one, repeatedly accessing the KV-cache, making it memory- and bandwidth-intensive. DistServe ([Zhong et al., 2024](https://arxiv.org/pdf/2401.09670)) introduced prefill–decode disaggregation to separate these phases across dedicated workers, reducing interference and enabling hardware to be allocated more efficiently.

### What is the prefill–decode ratio?

The ratio of prefill to decode workers determines how much capacity is dedicated to each phase. DistServe showed that for a workload with ISL=512 and OSL=64, a 2:1 ratio met both TTFT and TPOT targets. But this example does not answer how the ratio should be chosen more generally, or whether it needs to change at runtime.

!!! info "Reasoning model example"

- In the DeepSeek deployment ([LMSYS, 2025 :material-arrow-top-right-thin:{ .external }](https://lmsys.org/blog/2025-05-05-large-scale-ep){:target="_blank"}), the ratio was 1:3. This decode-leaning split reflects reasoning workloads, where long outputs dominate. Allocating more workers to decode reduces inter-token latency and keeps responses streaming smoothly.

+ In the DeepSeek deployment ([LMSYS, 2025](https://lmsys.org/blog/2025-05-05-large-scale-ep)), the ratio was 1:3. This decode-leaning split reflects reasoning workloads, where long outputs dominate. Allocating more workers to decode reduces inter-token latency and keeps responses streaming smoothly.

### Dynamic ratio

-Dynamic approaches, such as NVIDIA’s [SLA-based :material-arrow-top-right-thin:{ .external }](https://docs.nvidia.com/dynamo/latest/architecture/sla_planner.html){:target="_blank"}

-and [Load-based :material-arrow-top-right-thin:{ .external }](https://docs.nvidia.com/dynamo/latest/architecture/load_planner.html){:target="_blank"} planners, adjust the ratio at runtime according to SLO targets or load. However, they do this in conjunction with auto-scaling, which increases orchestration complexity. This raises the question: does the prefill–decode ratio really need to be dynamic, or can a fixed ratio be chosen ahead of time and still provide robust performance?

+Dynamic approaches, such as NVIDIA’s [SLA-based](https://docs.nvidia.com/dynamo/latest/architecture/sla_planner.html)

+and [Load-based](https://docs.nvidia.com/dynamo/latest/architecture/load_planner.html) planners, adjust the ratio at runtime according to SLO targets or load. However, they do this in conjunction with auto-scaling, which increases orchestration complexity. This raises the question: does the prefill–decode ratio really need to be dynamic, or can a fixed ratio be chosen ahead of time and still provide robust performance?

## Benchmark purpose

@@ -72,7 +72,7 @@ If a fixed ratio consistently performs well across these metrics, it would indic

* **Model**: `openai/gpt-oss-120b`

* **Backend**: SGLang

-For full steps and raw data, see the [GitHub repo :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/benchmarks/tree/main/comparison/pd_ratio){:target="_blank"}.

+For full steps and raw data, see the [GitHub repo](https://github.com/dstackai/benchmarks/tree/main/comparison/pd_ratio).

## Finding 1: Prefill-heavy workloads

@@ -134,8 +134,8 @@ Overall, more study on how the optimal ratio is found and what factors it depend

## References

-* [DistServe :material-arrow-top-right-thin:{ .external }](https://arxiv.org/pdf/2401.09670){:target="_blank"}

-* [DeepSeek deployment on 96 H100 GPUs :material-arrow-top-right-thin:{ .external }](https://lmsys.org/blog/2025-05-05-large-scale-ep/){:target="_blank"}

-* [Dynamo disaggregated serving :material-arrow-top-right-thin:{ .external }](https://docs.nvidia.com/dynamo/latest/architecture/disagg_serving.html#){:target="_blank"}

-* [SGLang PD disaggregation :material-arrow-top-right-thin:{ .external }](https://docs.sglang.ai/advanced_features/pd_disaggregation.html){:target="_blank"}

-* [vLLM disaggregated prefilling :material-arrow-top-right-thin:{ .external }](https://docs.vllm.ai/en/v0.9.2/features/disagg_prefill.html){:target="_blank"}

+* [DistServe](https://arxiv.org/pdf/2401.09670)

+* [DeepSeek deployment on 96 H100 GPUs](https://lmsys.org/blog/2025-05-05-large-scale-ep/)

+* [Dynamo disaggregated serving](https://docs.nvidia.com/dynamo/latest/architecture/disagg_serving.html#)

+* [SGLang PD disaggregation](https://docs.sglang.ai/advanced_features/pd_disaggregation.html)

+* [vLLM disaggregated prefilling](https://docs.vllm.ai/en/v0.9.2/features/disagg_prefill.html)

diff --git a/docs/blog/posts/beyond-kubernetes-2024-recap-and-whats-ahead.md b/docs/blog/posts/beyond-kubernetes-2024-recap-and-whats-ahead.md

index dec4945ed..4c6b43f9b 100644

--- a/docs/blog/posts/beyond-kubernetes-2024-recap-and-whats-ahead.md

+++ b/docs/blog/posts/beyond-kubernetes-2024-recap-and-whats-ahead.md

@@ -21,25 +21,25 @@ As 2024 comes to a close, we reflect on the milestones we've achieved and look a

While `dstack` integrates with leading cloud GPU providers, we aim to expand partnerships with more providers

sharing our vision of simplifying AI infrastructure orchestration with a lightweight, efficient alternative to Kubernetes.

-This year, we’re excited to welcome our first partners: [Lambda :material-arrow-top-right-thin:{ .external }](https://lambdalabs.com/){:target="_blank"},

-[RunPod :material-arrow-top-right-thin:{ .external }](https://www.runpod.io/){:target="_blank"},

-[CUDO Compute :material-arrow-top-right-thin:{ .external }](https://www.cudocompute.com/){:target="_blank"},

-and [Hot Aisle :material-arrow-top-right-thin:{ .external }](https://hotaisle.xyz/){:target="_blank"}.

+This year, we’re excited to welcome our first partners: [Lambda](https://lambdalabs.com/),

+[RunPod](https://www.runpod.io/),

+[CUDO Compute](https://www.cudocompute.com/),

+and [Hot Aisle](https://hotaisle.xyz/).

-We’d also like to thank [Oracle :material-arrow-top-right-thin:{ .external }](https://www.oracle.com/cloud/){:target="_blank"}

+We’d also like to thank [Oracle ](https://www.oracle.com/cloud/)

for their collaboration, ensuring seamless integration between `dstack` and OCI.

-> Special thanks to [Lambda :material-arrow-top-right-thin:{ .external }](https://lambdalabs.com/){:target="_blank"} and

-> [Hot Aisle :material-arrow-top-right-thin:{ .external }](https://hotaisle.xyz/){:target="_blank"} for providing NVIDIA and AMD hardware, enabling us conducting

+> Special thanks to [Lambda](https://lambdalabs.com/) and

+> [Hot Aisle](https://hotaisle.xyz/) for providing NVIDIA and AMD hardware, enabling us conducting

> [benchmarks](/blog/category/benchmarks/), which

> are essential to advancing open-source inference and training stacks for all accelerator chips.

## Community

Thanks to your support, the project has

-reached [1.6K stars on GitHub :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/dstack){:target="_blank"},

+reached [1.6K stars on GitHub](https://github.com/dstackai/dstack),

reflecting the growing interest and trust in its mission.

-Your issues, pull requests, as well as feedback on [Discord :material-arrow-top-right-thin:{ .external }](https://discord.gg/u8SmfwPpMd){:target="_blank"}, play a

+Your issues, pull requests, as well as feedback on [Discord](https://discord.gg/u8SmfwPpMd), play a

critical role in the project's development.

## Fleets

@@ -87,7 +87,7 @@ This turns your on-prem cluster into a `dstack` fleet, ready to run dev environm

### GPU blocks

At `dstack`, when running a job on an instance, it uses all available GPUs on that instance. In Q1 2025, we will

-introduce [GPU blocks :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/dstack/issues/1780){:target="_blank"},

+introduce [GPU blocks](https://github.com/dstackai/dstack/issues/1780),

allowing the allocation of instance GPUs into discrete blocks that can be reused by concurrent jobs.

This will enable more cost-efficient utilization of expensive instances.

@@ -112,16 +112,16 @@ for model deployment, and we continue to enhance support for the rest of NVIDIA'

This year, we’re particularly proud of our newly added integration with AMD.

`dstack` works seamlessly with any on-prem AMD clusters. For example, you can rent such servers through our partner

-[Hot Aisle :material-arrow-top-right-thin:{ .external }](https://hotaisle.xyz/){:target="_blank"}.

+[Hot Aisle](https://hotaisle.xyz/).

-> Among cloud providers, [AMD :material-arrow-top-right-thin:{ .external }](https://www.amd.com/en/products/accelerators/instinct.html){:target="_blank"} is supported only through RunPod. In Q1 2025, we plan to extend it to

-[Nscale :material-arrow-top-right-thin:{ .external }](https://www.nscale.com/){:target="_blank"},

-> [Hot Aisle :material-arrow-top-right-thin:{ .external }](https://hotaisle.xyz/){:target="_blank"}, and potentially other providers open to collaboration.

+> Among cloud providers, [AMD](https://www.amd.com/en/products/accelerators/instinct.html) is supported only through RunPod. In Q1 2025, we plan to extend it to

+[Nscale](https://www.nscale.com/),

+> [Hot Aisle](https://hotaisle.xyz/), and potentially other providers open to collaboration.

### Intel

In Q1 2025, our roadmap includes added integration with

-[Intel Gaudi :material-arrow-top-right-thin:{ .external }](https://www.intel.com/content/www/us/en/products/details/processors/ai-accelerators/gaudi-overview.html){:target="_blank"}

+[Intel Gaudi](https://www.intel.com/content/www/us/en/products/details/processors/ai-accelerators/gaudi-overview.html)

among other accelerator chips.

## Join the community

diff --git a/docs/blog/posts/changelog-07-25.md b/docs/blog/posts/changelog-07-25.md

index 13bf67f54..909fa2859 100644

--- a/docs/blog/posts/changelog-07-25.md

+++ b/docs/blog/posts/changelog-07-25.md

@@ -112,7 +112,7 @@ resources:

#### Tenstorrent

-`dstack` remains committed to supporting multiple GPU vendors—including NVIDIA, AMD, TPUs, and more recently, [Tenstorrent :material-arrow-top-right-thin:{ .external }](https://tenstorrent.com/){:target="_blank"}. The latest release improves Tenstorrent support by handling hosts with multiple N300 cards and adds Docker-in-Docker support.

+`dstack` remains committed to supporting multiple GPU vendors—including NVIDIA, AMD, TPUs, and more recently, [Tenstorrent](https://tenstorrent.com/). The latest release improves Tenstorrent support by handling hosts with multiple N300 cards and adds Docker-in-Docker support.

@@ -192,7 +192,7 @@ Server-side performance has been improved. With optimized handling and backgroun

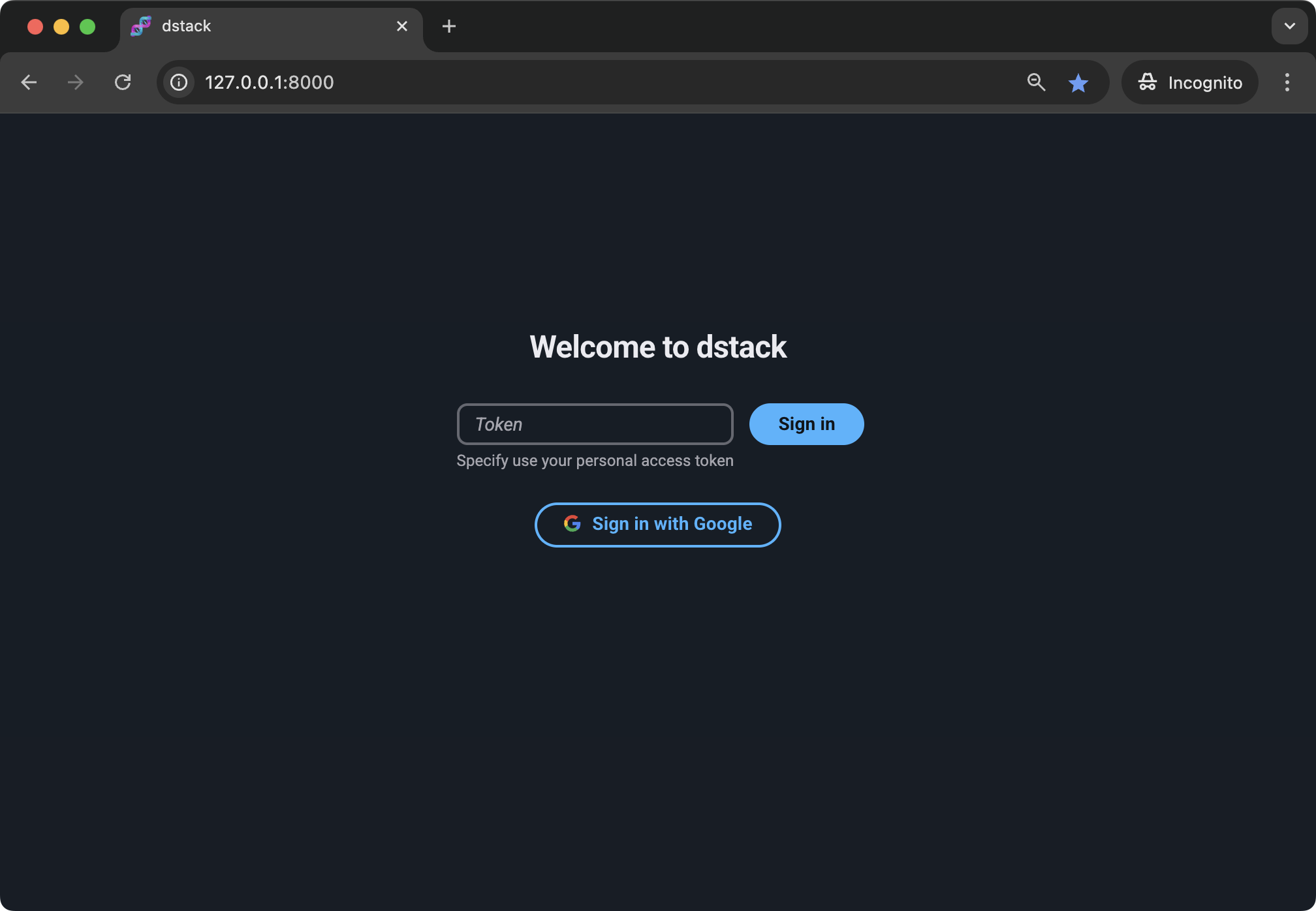

#### Google SSO

-Alongside the open-source version, `dstack` also offers [dstack Enterprise :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/dstack-enterprise){:target="_blank"} — which adds dedicated support and extra integrations like Single Sign-On (SSO). The latest release introduces support for configuring your company’s Google account for authentication.

+Alongside the open-source version, `dstack` also offers [dstack Enterprise](https://github.com/dstackai/dstack-enterprise) — which adds dedicated support and extra integrations like Single Sign-On (SSO). The latest release introduces support for configuring your company’s Google account for authentication.

@@ -192,7 +192,7 @@ Server-side performance has been improved. With optimized handling and backgroun

#### Google SSO

-Alongside the open-source version, `dstack` also offers [dstack Enterprise :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/dstack-enterprise){:target="_blank"} — which adds dedicated support and extra integrations like Single Sign-On (SSO). The latest release introduces support for configuring your company’s Google account for authentication.

+Alongside the open-source version, `dstack` also offers [dstack Enterprise](https://github.com/dstackai/dstack-enterprise) — which adds dedicated support and extra integrations like Single Sign-On (SSO). The latest release introduces support for configuring your company’s Google account for authentication.

@@ -201,4 +201,4 @@ If you’d like to learn more about `dstack` Enterprise, [let us know](https://c

That’s all for now.

!!! info "What's next?"

- Give dstack a try, and share your feedback—whether it’s [GitHub :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/dstack){:target="_blank"} issues, PRs, or questions on [Discord :material-arrow-top-right-thin:{ .external }](https://discord.gg/u8SmfwPpMd){:target="_blank"}. We’re eager to hear from you!

+ Give dstack a try, and share your feedback—whether it’s [GitHub](https://github.com/dstackai/dstack) issues, PRs, or questions on [Discord](https://discord.gg/u8SmfwPpMd). We’re eager to hear from you!

diff --git a/docs/blog/posts/cursor.md b/docs/blog/posts/cursor.md

index a5f960469..4e8e01fb4 100644

--- a/docs/blog/posts/cursor.md

+++ b/docs/blog/posts/cursor.md

@@ -15,7 +15,7 @@ automatic repository fetching, and streamlined access via SSH or a preferred des

Previously, support was limited to VS Code. However, as developers rely on a variety of desktop IDEs,

we’ve expanded compatibility. With this update, dev environments now offer effortless access for users of

-[Cursor :material-arrow-top-right-thin:{ .external }](https://www.cursor.com/){:target="_blank"}.

+[Cursor](https://www.cursor.com/).

@@ -201,4 +201,4 @@ If you’d like to learn more about `dstack` Enterprise, [let us know](https://c

That’s all for now.

!!! info "What's next?"

- Give dstack a try, and share your feedback—whether it’s [GitHub :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/dstack){:target="_blank"} issues, PRs, or questions on [Discord :material-arrow-top-right-thin:{ .external }](https://discord.gg/u8SmfwPpMd){:target="_blank"}. We’re eager to hear from you!

+ Give dstack a try, and share your feedback—whether it’s [GitHub](https://github.com/dstackai/dstack) issues, PRs, or questions on [Discord](https://discord.gg/u8SmfwPpMd). We’re eager to hear from you!

diff --git a/docs/blog/posts/cursor.md b/docs/blog/posts/cursor.md

index a5f960469..4e8e01fb4 100644

--- a/docs/blog/posts/cursor.md

+++ b/docs/blog/posts/cursor.md

@@ -15,7 +15,7 @@ automatic repository fetching, and streamlined access via SSH or a preferred des

Previously, support was limited to VS Code. However, as developers rely on a variety of desktop IDEs,

we’ve expanded compatibility. With this update, dev environments now offer effortless access for users of

-[Cursor :material-arrow-top-right-thin:{ .external }](https://www.cursor.com/){:target="_blank"}.

+[Cursor](https://www.cursor.com/).

@@ -79,8 +79,8 @@ Using Cursor over VS Code offers multiple benefits, particularly when it comes t

enhanced developer experience.

!!! info "What's next?"

- 1. [Download :material-arrow-top-right-thin:{ .external }](https://www.cursor.com/){:target="_blank"} and install Cursor

+ 1. [Download](https://www.cursor.com/) and install Cursor

2. Learn more about [dev environments](../../docs/concepts/dev-environments.md),

[tasks](../../docs/concepts/tasks.md), [services](../../docs/concepts/services.md),

and [fleets](../../docs/concepts/fleets.md)

- 2. Join [Discord :material-arrow-top-right-thin:{ .external }](https://discord.gg/u8SmfwPpMd){:target="_blank"}

+ 2. Join [Discord](https://discord.gg/u8SmfwPpMd)

diff --git a/docs/blog/posts/digitalocean-and-amd-dev-cloud.md b/docs/blog/posts/digitalocean-and-amd-dev-cloud.md

index 4103e6e15..ce400899d 100644

--- a/docs/blog/posts/digitalocean-and-amd-dev-cloud.md

+++ b/docs/blog/posts/digitalocean-and-amd-dev-cloud.md

@@ -12,8 +12,8 @@ categories:

Orchestration automates provisioning, running jobs, and tearing them down. While Kubernetes and Slurm are powerful in their domains, they lack the lightweight, GPU-native focus modern teams need to move faster.

-`dstack` is built entirely around GPUs. Our latest update introduces native integration with [DigitalOcean :material-arrow-top-right-thin:{ .external }](https://www.digitalocean.com/products/gradient/gpu-droplets){:target="_blank"} and

-[AMD Developer Cloud :material-arrow-top-right-thin:{ .external }](https://www.amd.com/en/developer/resources/cloud-access/amd-developer-cloud.html){:target="_blank"}, enabling teams to provision cloud GPUs and run workloads more cost-efficiently.

+`dstack` is built entirely around GPUs. Our latest update introduces native integration with [DigitalOcean](https://www.digitalocean.com/products/gradient/gpu-droplets) and

+[AMD Developer Cloud](https://www.amd.com/en/developer/resources/cloud-access/amd-developer-cloud.html), enabling teams to provision cloud GPUs and run workloads more cost-efficiently.

@@ -79,8 +79,8 @@ Using Cursor over VS Code offers multiple benefits, particularly when it comes t

enhanced developer experience.

!!! info "What's next?"

- 1. [Download :material-arrow-top-right-thin:{ .external }](https://www.cursor.com/){:target="_blank"} and install Cursor

+ 1. [Download](https://www.cursor.com/) and install Cursor

2. Learn more about [dev environments](../../docs/concepts/dev-environments.md),

[tasks](../../docs/concepts/tasks.md), [services](../../docs/concepts/services.md),

and [fleets](../../docs/concepts/fleets.md)

- 2. Join [Discord :material-arrow-top-right-thin:{ .external }](https://discord.gg/u8SmfwPpMd){:target="_blank"}

+ 2. Join [Discord](https://discord.gg/u8SmfwPpMd)

diff --git a/docs/blog/posts/digitalocean-and-amd-dev-cloud.md b/docs/blog/posts/digitalocean-and-amd-dev-cloud.md

index 4103e6e15..ce400899d 100644

--- a/docs/blog/posts/digitalocean-and-amd-dev-cloud.md

+++ b/docs/blog/posts/digitalocean-and-amd-dev-cloud.md

@@ -12,8 +12,8 @@ categories:

Orchestration automates provisioning, running jobs, and tearing them down. While Kubernetes and Slurm are powerful in their domains, they lack the lightweight, GPU-native focus modern teams need to move faster.

-`dstack` is built entirely around GPUs. Our latest update introduces native integration with [DigitalOcean :material-arrow-top-right-thin:{ .external }](https://www.digitalocean.com/products/gradient/gpu-droplets){:target="_blank"} and

-[AMD Developer Cloud :material-arrow-top-right-thin:{ .external }](https://www.amd.com/en/developer/resources/cloud-access/amd-developer-cloud.html){:target="_blank"}, enabling teams to provision cloud GPUs and run workloads more cost-efficiently.

+`dstack` is built entirely around GPUs. Our latest update introduces native integration with [DigitalOcean](https://www.digitalocean.com/products/gradient/gpu-droplets) and

+[AMD Developer Cloud](https://www.amd.com/en/developer/resources/cloud-access/amd-developer-cloud.html), enabling teams to provision cloud GPUs and run workloads more cost-efficiently.

@@ -143,9 +143,9 @@ $ dstack apply -f examples/models/gpt-oss/120b.dstack.yml

!!! info "What's next?"

1. Check [Quickstart](../../docs/quickstart.md)

- 2. Learn more about [DigitalOcean :material-arrow-top-right-thin:{ .external }](https://www.digitalocean.com/products/gradient/gpu-droplets){:target="_blank"} and

- [AMD Developer Cloud :material-arrow-top-right-thin:{ .external }](https://www.amd.com/en/developer/resources/cloud-access/amd-developer-cloud.html){:target="_blank"}

+ 2. Learn more about [DigitalOcean](https://www.digitalocean.com/products/gradient/gpu-droplets) and

+ [AMD Developer Cloud](https://www.amd.com/en/developer/resources/cloud-access/amd-developer-cloud.html)

3. Explore [dev environments](../../docs/concepts/dev-environments.md),

[tasks](../../docs/concepts/tasks.md), [services](../../docs/concepts/services.md),

and [fleets](../../docs/concepts/fleets.md)

- 4. Join [Discord :material-arrow-top-right-thin:{ .external }](https://discord.gg/u8SmfwPpMd){:target="_blank"}

+ 4. Join [Discord](https://discord.gg/u8SmfwPpMd)

diff --git a/docs/blog/posts/docker-inside-containers.md b/docs/blog/posts/docker-inside-containers.md

index 13af39030..699e75fe7 100644

--- a/docs/blog/posts/docker-inside-containers.md

+++ b/docs/blog/posts/docker-inside-containers.md

@@ -94,12 +94,12 @@ Last but not least, you can, of course, use the `docker run` command, for exampl

## Examples

-A few examples of using this feature can be found in [`examples/misc/docker-compose` :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/dstack/blob/master/examples/misc/docker-compose){: target="_ blank"}.

+A few examples of using this feature can be found in [`examples/misc/docker-compose`](https://github.com/dstackai/dstack/blob/master/examples/misc/docker-compose).

## Feedback

If you find something not working as intended, please be sure to report it to

-our [bug tracker :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/dstack/issues){:target="_ blank"}.

+our [bug tracker](https://github.com/dstackai/dstack/issues){:target="_ blank"}.

Your feedback and feature requests are also very welcome on both

-[Discord :material-arrow-top-right-thin:{ .external }](https://discord.gg/u8SmfwPpMd){:target="_blank"} and the

-[issue tracker :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/dstack/issues){:target="_blank"}.

+[Discord](https://discord.gg/u8SmfwPpMd) and the

+[issue tracker](https://github.com/dstackai/dstack/issues).

diff --git a/docs/blog/posts/dstack-metrics.md b/docs/blog/posts/dstack-metrics.md

index f4647d782..4558cd0cc 100644

--- a/docs/blog/posts/dstack-metrics.md

+++ b/docs/blog/posts/dstack-metrics.md

@@ -63,7 +63,7 @@ Monitoring is a critical part of observability, and we have many more features o

## Feedback

If you find something not working as intended, please be sure to report it to

-our [bug tracker :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/dstack/issues){:target="_ blank"}.

+our [bug tracker](https://github.com/dstackai/dstack/issues){:target="_ blank"}.

Your feedback and feature requests are also very welcome on both

-[Discord :material-arrow-top-right-thin:{ .external }](https://discord.gg/u8SmfwPpMd){:target="_blank"} and the

-[issue tracker :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/dstack/issues){:target="_blank"}.

+[Discord](https://discord.gg/u8SmfwPpMd) and the

+[issue tracker](https://github.com/dstackai/dstack/issues).

diff --git a/docs/blog/posts/dstack-sky-own-cloud-accounts.md b/docs/blog/posts/dstack-sky-own-cloud-accounts.md

index 16b68867c..13c927a31 100644

--- a/docs/blog/posts/dstack-sky-own-cloud-accounts.md

+++ b/docs/blog/posts/dstack-sky-own-cloud-accounts.md

@@ -9,7 +9,7 @@ categories:

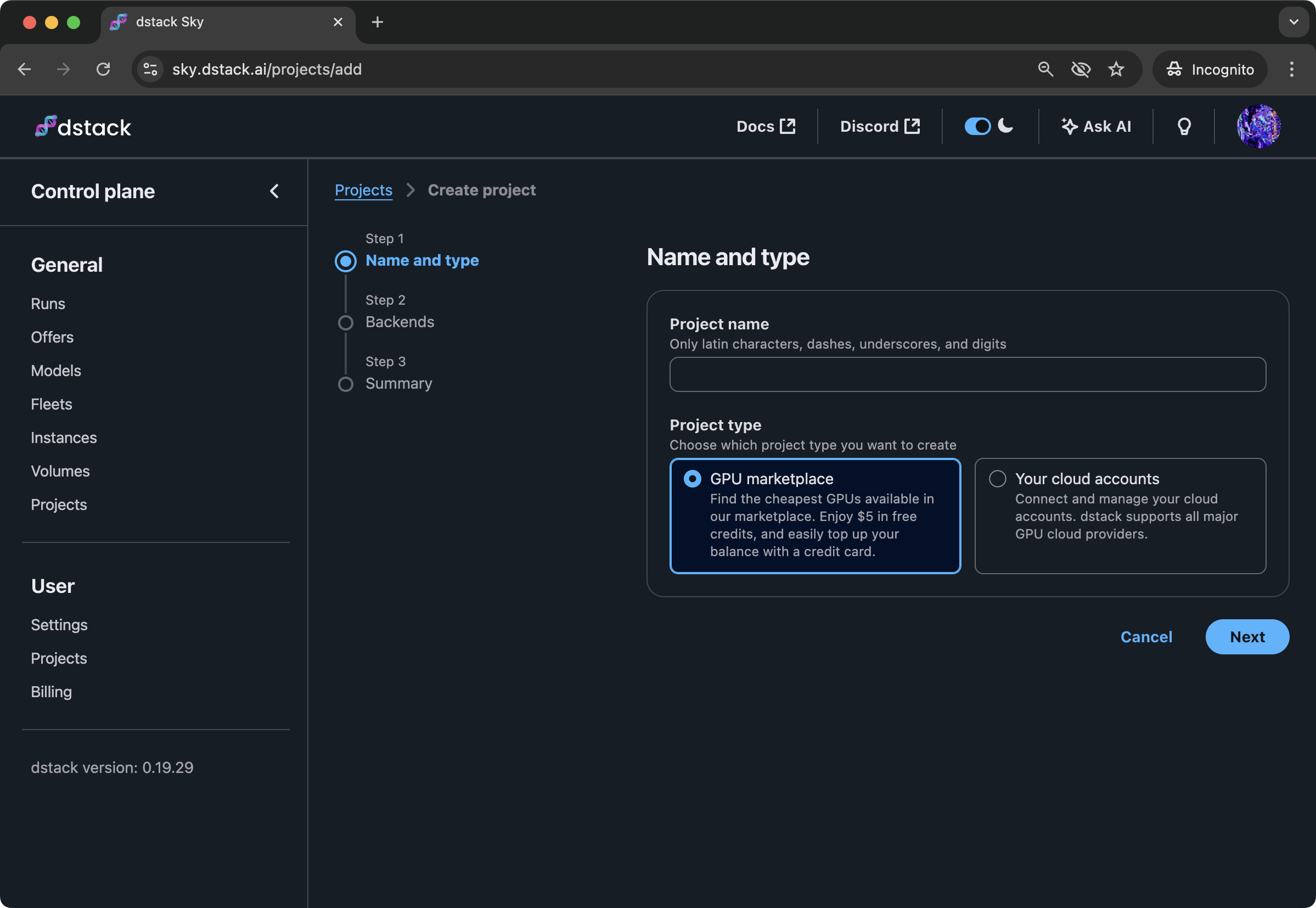

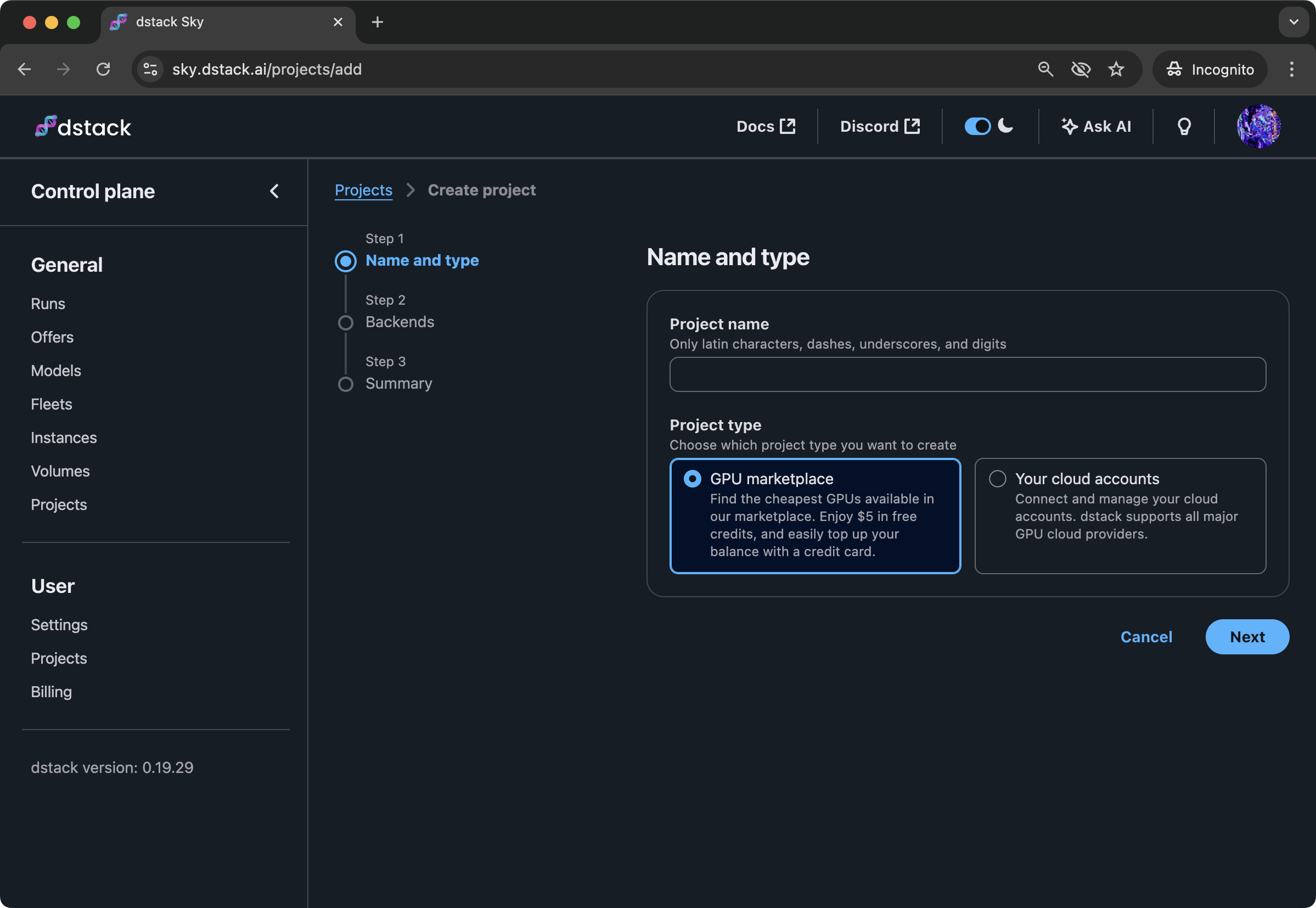

# dstack Sky now supports your own cloud accounts

-[dstack Sky :material-arrow-top-right-thin:{ .external }](https://sky.dstack.ai){:target="_blank"}

+[dstack Sky](https://sky.dstack.ai)

enables you to access GPUs from the global marketplace at the most

competitive rates. However, sometimes you may want to use your own cloud accounts.

With today's release, both options are now supported.

@@ -30,12 +30,12 @@ CUDO, RunPod, and Vast.ai.

Additionally, you can disable certain backends if you do not plan to use them.

Typically, if you prefer using your own cloud accounts, it's recommended that you use the

-[open-source version :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/dstack/){:target="_blank"} of `dstack`.

+[open-source version](https://github.com/dstackai/dstack/) of `dstack`.

However, if you prefer not to host it yourself, now you can use `dstack Sky`

with your own cloud accounts as well.

> Seeking the cheapest on-demand and spot cloud GPUs?

-> [dstack Sky :material-arrow-top-right-thin:{ .external }](https://sky.dstack.ai){:target="_blank"} has you covered!

+> [dstack Sky](https://sky.dstack.ai) has you covered!

Need help, have a question, or just want to stay updated?

diff --git a/docs/blog/posts/ea-gtc25.md b/docs/blog/posts/ea-gtc25.md

index 262554866..b7a28ba59 100644

--- a/docs/blog/posts/ea-gtc25.md

+++ b/docs/blog/posts/ea-gtc25.md

@@ -12,7 +12,7 @@ links:

# How EA uses dstack to fast-track AI development

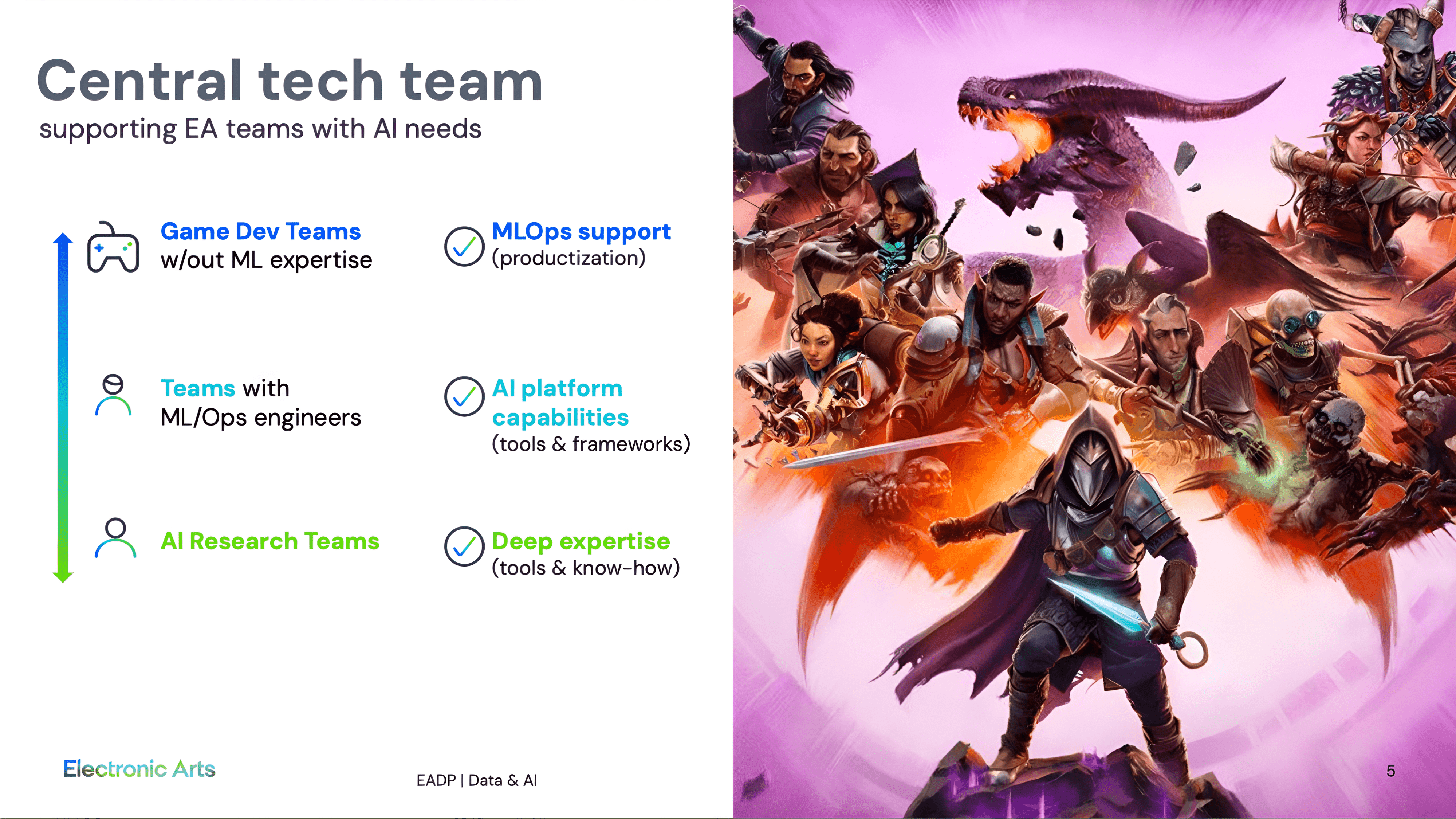

-At NVIDIA GTC 2025, Electronic Arts [shared :material-arrow-top-right-thin:{ .external }](https://www.nvidia.com/en-us/on-demand/session/gtc25-s73667/){:target="_blank"} how they’re scaling AI development and managing infrastructure across teams. They highlighted using tools like `dstack` to provision GPUs quickly, flexibly, and cost-efficiently. This case study summarizes key insights from their talk.

+At NVIDIA GTC 2025, Electronic Arts [shared](https://www.nvidia.com/en-us/on-demand/session/gtc25-s73667/) how they’re scaling AI development and managing infrastructure across teams. They highlighted using tools like `dstack` to provision GPUs quickly, flexibly, and cost-efficiently. This case study summarizes key insights from their talk.

@@ -143,9 +143,9 @@ $ dstack apply -f examples/models/gpt-oss/120b.dstack.yml

!!! info "What's next?"

1. Check [Quickstart](../../docs/quickstart.md)

- 2. Learn more about [DigitalOcean :material-arrow-top-right-thin:{ .external }](https://www.digitalocean.com/products/gradient/gpu-droplets){:target="_blank"} and

- [AMD Developer Cloud :material-arrow-top-right-thin:{ .external }](https://www.amd.com/en/developer/resources/cloud-access/amd-developer-cloud.html){:target="_blank"}

+ 2. Learn more about [DigitalOcean](https://www.digitalocean.com/products/gradient/gpu-droplets) and

+ [AMD Developer Cloud](https://www.amd.com/en/developer/resources/cloud-access/amd-developer-cloud.html)

3. Explore [dev environments](../../docs/concepts/dev-environments.md),

[tasks](../../docs/concepts/tasks.md), [services](../../docs/concepts/services.md),

and [fleets](../../docs/concepts/fleets.md)

- 4. Join [Discord :material-arrow-top-right-thin:{ .external }](https://discord.gg/u8SmfwPpMd){:target="_blank"}

+ 4. Join [Discord](https://discord.gg/u8SmfwPpMd)

diff --git a/docs/blog/posts/docker-inside-containers.md b/docs/blog/posts/docker-inside-containers.md

index 13af39030..699e75fe7 100644

--- a/docs/blog/posts/docker-inside-containers.md

+++ b/docs/blog/posts/docker-inside-containers.md

@@ -94,12 +94,12 @@ Last but not least, you can, of course, use the `docker run` command, for exampl

## Examples

-A few examples of using this feature can be found in [`examples/misc/docker-compose` :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/dstack/blob/master/examples/misc/docker-compose){: target="_ blank"}.

+A few examples of using this feature can be found in [`examples/misc/docker-compose`](https://github.com/dstackai/dstack/blob/master/examples/misc/docker-compose).

## Feedback

If you find something not working as intended, please be sure to report it to

-our [bug tracker :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/dstack/issues){:target="_ blank"}.

+our [bug tracker](https://github.com/dstackai/dstack/issues){:target="_ blank"}.

Your feedback and feature requests are also very welcome on both

-[Discord :material-arrow-top-right-thin:{ .external }](https://discord.gg/u8SmfwPpMd){:target="_blank"} and the

-[issue tracker :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/dstack/issues){:target="_blank"}.

+[Discord](https://discord.gg/u8SmfwPpMd) and the

+[issue tracker](https://github.com/dstackai/dstack/issues).

diff --git a/docs/blog/posts/dstack-metrics.md b/docs/blog/posts/dstack-metrics.md

index f4647d782..4558cd0cc 100644

--- a/docs/blog/posts/dstack-metrics.md

+++ b/docs/blog/posts/dstack-metrics.md

@@ -63,7 +63,7 @@ Monitoring is a critical part of observability, and we have many more features o

## Feedback

If you find something not working as intended, please be sure to report it to

-our [bug tracker :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/dstack/issues){:target="_ blank"}.

+our [bug tracker](https://github.com/dstackai/dstack/issues){:target="_ blank"}.

Your feedback and feature requests are also very welcome on both

-[Discord :material-arrow-top-right-thin:{ .external }](https://discord.gg/u8SmfwPpMd){:target="_blank"} and the

-[issue tracker :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/dstack/issues){:target="_blank"}.

+[Discord](https://discord.gg/u8SmfwPpMd) and the

+[issue tracker](https://github.com/dstackai/dstack/issues).

diff --git a/docs/blog/posts/dstack-sky-own-cloud-accounts.md b/docs/blog/posts/dstack-sky-own-cloud-accounts.md

index 16b68867c..13c927a31 100644

--- a/docs/blog/posts/dstack-sky-own-cloud-accounts.md

+++ b/docs/blog/posts/dstack-sky-own-cloud-accounts.md

@@ -9,7 +9,7 @@ categories:

# dstack Sky now supports your own cloud accounts

-[dstack Sky :material-arrow-top-right-thin:{ .external }](https://sky.dstack.ai){:target="_blank"}

+[dstack Sky](https://sky.dstack.ai)

enables you to access GPUs from the global marketplace at the most

competitive rates. However, sometimes you may want to use your own cloud accounts.

With today's release, both options are now supported.

@@ -30,12 +30,12 @@ CUDO, RunPod, and Vast.ai.

Additionally, you can disable certain backends if you do not plan to use them.

Typically, if you prefer using your own cloud accounts, it's recommended that you use the

-[open-source version :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/dstack/){:target="_blank"} of `dstack`.

+[open-source version](https://github.com/dstackai/dstack/) of `dstack`.

However, if you prefer not to host it yourself, now you can use `dstack Sky`

with your own cloud accounts as well.

> Seeking the cheapest on-demand and spot cloud GPUs?

-> [dstack Sky :material-arrow-top-right-thin:{ .external }](https://sky.dstack.ai){:target="_blank"} has you covered!

+> [dstack Sky](https://sky.dstack.ai) has you covered!

Need help, have a question, or just want to stay updated?

diff --git a/docs/blog/posts/ea-gtc25.md b/docs/blog/posts/ea-gtc25.md

index 262554866..b7a28ba59 100644

--- a/docs/blog/posts/ea-gtc25.md

+++ b/docs/blog/posts/ea-gtc25.md

@@ -12,7 +12,7 @@ links:

# How EA uses dstack to fast-track AI development

-At NVIDIA GTC 2025, Electronic Arts [shared :material-arrow-top-right-thin:{ .external }](https://www.nvidia.com/en-us/on-demand/session/gtc25-s73667/){:target="_blank"} how they’re scaling AI development and managing infrastructure across teams. They highlighted using tools like `dstack` to provision GPUs quickly, flexibly, and cost-efficiently. This case study summarizes key insights from their talk.

+At NVIDIA GTC 2025, Electronic Arts [shared](https://www.nvidia.com/en-us/on-demand/session/gtc25-s73667/) how they’re scaling AI development and managing infrastructure across teams. They highlighted using tools like `dstack` to provision GPUs quickly, flexibly, and cost-efficiently. This case study summarizes key insights from their talk.

@@ -80,7 +80,7 @@ Workflows became standardized, reproducible, and easier to trace—thanks to the

By adopting tools that are cloud-agnostic and developer-friendly, EA has reduced friction—from provisioning GPUs to deploying models—and enabled teams to spend more time on actual ML work.

-*Huge thanks to Kris and Keng from EA’s central tech team for sharing these insights. For more details, including the recording and slides, check out the full talk on the [NVIDIA GTC website :material-arrow-top-right-thin:{ .external }](https://www.nvidia.com/en-us/on-demand/session/gtc25-s73667/){:target="_blank"}.*

+*Huge thanks to Kris and Keng from EA’s central tech team for sharing these insights. For more details, including the recording and slides, check out the full talk on the [NVIDIA GTC website](https://www.nvidia.com/en-us/on-demand/session/gtc25-s73667/).*

!!! info "What's next?"

1. Check [dev environments](../../docs/concepts/dev-environments.md), [tasks](../../docs/concepts/tasks.md), [services](../../docs/concepts/services.md), and [fleets](../../docs/concepts/fleets.md)

diff --git a/docs/blog/posts/gh200-on-lambda.md b/docs/blog/posts/gh200-on-lambda.md

index e7831a76c..1a87dc90e 100644

--- a/docs/blog/posts/gh200-on-lambda.md

+++ b/docs/blog/posts/gh200-on-lambda.md

@@ -42,7 +42,7 @@ The GH200 Superchip’s 450 GB/s bidirectional bandwidth enables KV cache offloa

## GH200 on Lambda

-[Lambda :material-arrow-top-right-thin:{ .external }](https://cloud.lambda.ai/sign-up?_gl=1*1qovk06*_gcl_au*MTg2MDc3OTAyOS4xNzQyOTA3Nzc0LjE3NDkwNTYzNTYuMTc0NTQxOTE2MS4xNzQ1NDE5MTYw*_ga*MTE2NDM5MzI0My4xNzQyOTA3Nzc0*_ga_43EZT1FM6Q*czE3NDY3MTczOTYkbzM0JGcxJHQxNzQ2NzE4MDU2JGo1NyRsMCRoMTU0Mzg1NTU1OQ..){:target="_blank"} provides secure, user-friendly, reliable, and affordable cloud GPUs. Since end of last year, Lambda started to offer on-demand GH200 instances through their public cloud. Furthermore, they offer these instances at the promotional price of $1.49 per hour until June 30th 2025.

+[Lambda](https://cloud.lambda.ai/sign-up?_gl=1*1qovk06*_gcl_au*MTg2MDc3OTAyOS4xNzQyOTA3Nzc0LjE3NDkwNTYzNTYuMTc0NTQxOTE2MS4xNzQ1NDE5MTYw*_ga*MTE2NDM5MzI0My4xNzQyOTA3Nzc0*_ga_43EZT1FM6Q*czE3NDY3MTczOTYkbzM0JGcxJHQxNzQ2NzE4MDU2JGo1NyRsMCRoMTU0Mzg1NTU1OQ..) provides secure, user-friendly, reliable, and affordable cloud GPUs. Since end of last year, Lambda started to offer on-demand GH200 instances through their public cloud. Furthermore, they offer these instances at the promotional price of $1.49 per hour until June 30th 2025.

With the latest `dstack` update, it’s now possible to use these instances with your Lambda account whether you’re running a dev environment, task, or service:

@@ -81,7 +81,7 @@ $ dstack apply -f .dstack.yml

> If you have GH200 or GB200-powered hosts already provisioned via Lambda, another cloud provider, or on-prem, you can now use them with [SSH fleets](../../docs/concepts/fleets.md#ssh-fleets).

!!! info "What's next?"

- 1. Sign up with [Lambda :material-arrow-top-right-thin:{ .external }](https://cloud.lambda.ai/sign-up?_gl=1*1qovk06*_gcl_au*MTg2MDc3OTAyOS4xNzQyOTA3Nzc0LjE3NDkwNTYzNTYuMTc0NTQxOTE2MS4xNzQ1NDE5MTYw*_ga*MTE2NDM5MzI0My4xNzQyOTA3Nzc0*_ga_43EZT1FM6Q*czE3NDY3MTczOTYkbzM0JGcxJHQxNzQ2NzE4MDU2JGo1NyRsMCRoMTU0Mzg1NTU1OQ..){:target="_blank"}

+ 1. Sign up with [Lambda](https://cloud.lambda.ai/sign-up?_gl=1*1qovk06*_gcl_au*MTg2MDc3OTAyOS4xNzQyOTA3Nzc0LjE3NDkwNTYzNTYuMTc0NTQxOTE2MS4xNzQ1NDE5MTYw*_ga*MTE2NDM5MzI0My4xNzQyOTA3Nzc0*_ga_43EZT1FM6Q*czE3NDY3MTczOTYkbzM0JGcxJHQxNzQ2NzE4MDU2JGo1NyRsMCRoMTU0Mzg1NTU1OQ..)

2. Set up the [Lambda](../../docs/concepts/backends.md#lambda) backend

3. Follow [Quickstart](../../docs/quickstart.md)

4. Check [dev environments](../../docs/concepts/dev-environments.md), [tasks](../../docs/concepts/tasks.md), [services](../../docs/concepts/services.md), and [fleets](../../docs/concepts/fleets.md)

diff --git a/docs/blog/posts/gpu-blocks-and-proxy-jump.md b/docs/blog/posts/gpu-blocks-and-proxy-jump.md

index cbf9ab7dc..61f28ea81 100644

--- a/docs/blog/posts/gpu-blocks-and-proxy-jump.md

+++ b/docs/blog/posts/gpu-blocks-and-proxy-jump.md

@@ -39,7 +39,7 @@ enables optimal hardware utilization by allowing concurrent workloads to run on

available resources on each host.

> For example, imagine you’ve reserved a cluster with multiple bare-metal nodes, each equipped with 8x MI300X GPUs from

-[Hot Aisle :material-arrow-top-right-thin:{ .external }](https://hotaisle.xyz/){:target="_blank"}.

+[Hot Aisle](https://hotaisle.xyz/).

With `dstack`, you can define your fleet configuration like this:

@@ -108,7 +108,7 @@ The latest `dstack` release introduces the [`proxy_jump`](../../docs/concepts/fl

through a login node.

> For example, imagine you’ve reserved a 1-Click Cluster from

-> [Lambda :material-arrow-top-right-thin:{ .external }](https://lambdalabs.com/){:target="_blank"} with multiple nodes, each equipped with 8x H100 GPUs from.

+> [Lambda](https://lambdalabs.com/) with multiple nodes, each equipped with 8x H100 GPUs from.

With `dstack`, you can define your fleet configuration like this:

@@ -174,8 +174,8 @@ an AI-native experience, simplicity, and vendor-agnostic orchestration for both

!!! info "Roadmap"

We plan to further enhance `dstack`'s support for both cloud and on-premises setups. For more details on our roadmap,

- refer to our [GitHub :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/dstack/issues/2184){:target="_blank"}.

+ refer to our [GitHub](https://github.com/dstackai/dstack/issues/2184).

> Have questions? You're welcome to join

-> our [Discord :material-arrow-top-right-thin:{ .external }](https://discord.gg/u8SmfwPpMd){:target="_blank"} or talk

-> directly to [our team :material-arrow-top-right-thin:{ .external }](https://calendly.com/dstackai/discovery-call){:target="_blank"}.

+> our [Discord](https://discord.gg/u8SmfwPpMd) or talk

+> directly to [our team](https://calendly.com/dstackai/discovery-call).

diff --git a/docs/blog/posts/gpu-health-checks.md b/docs/blog/posts/gpu-health-checks.md

index 1571935b6..cc28bb96a 100644

--- a/docs/blog/posts/gpu-health-checks.md

+++ b/docs/blog/posts/gpu-health-checks.md

@@ -64,10 +64,10 @@ Passive GPU health checks work on AWS (except with custom `os_images`), Azure (e

This update is about visibility: giving engineers real-time insight into GPU health before jobs run. Next comes automation — policies to skip GPUs with warnings, and self-healing workflows that replace unhealthy instances without manual steps.

If you have experience with GPU reliability or ideas for automated recovery, join the conversation on

-[Discord :material-arrow-top-right-thin:{ .external }](https://discord.gg/u8SmfwPpMd){:target="_blank"}.

+[Discord](https://discord.gg/u8SmfwPpMd).

!!! info "What's next?"

1. Check [Quickstart](../../docs/quickstart.md)

2. Explore the [clusters](../../docs/guides/clusters.md) guide

3. Learn more about [metrics](../../docs/guides/metrics.md)

- 4. Join [Discord :material-arrow-top-right-thin:{ .external }](https://discord.gg/u8SmfwPpMd){:target="_blank"}

+ 4. Join [Discord](https://discord.gg/u8SmfwPpMd)

diff --git a/docs/blog/posts/h100-mi300x-inference-benchmark.md b/docs/blog/posts/h100-mi300x-inference-benchmark.md

index 350795684..2209393d1 100644

--- a/docs/blog/posts/h100-mi300x-inference-benchmark.md

+++ b/docs/blog/posts/h100-mi300x-inference-benchmark.md

@@ -22,8 +22,8 @@ Finally, we extrapolate performance projections for upcoming GPUs like NVIDIA H2

@@ -80,7 +80,7 @@ Workflows became standardized, reproducible, and easier to trace—thanks to the

By adopting tools that are cloud-agnostic and developer-friendly, EA has reduced friction—from provisioning GPUs to deploying models—and enabled teams to spend more time on actual ML work.

-*Huge thanks to Kris and Keng from EA’s central tech team for sharing these insights. For more details, including the recording and slides, check out the full talk on the [NVIDIA GTC website :material-arrow-top-right-thin:{ .external }](https://www.nvidia.com/en-us/on-demand/session/gtc25-s73667/){:target="_blank"}.*

+*Huge thanks to Kris and Keng from EA’s central tech team for sharing these insights. For more details, including the recording and slides, check out the full talk on the [NVIDIA GTC website](https://www.nvidia.com/en-us/on-demand/session/gtc25-s73667/).*

!!! info "What's next?"

1. Check [dev environments](../../docs/concepts/dev-environments.md), [tasks](../../docs/concepts/tasks.md), [services](../../docs/concepts/services.md), and [fleets](../../docs/concepts/fleets.md)

diff --git a/docs/blog/posts/gh200-on-lambda.md b/docs/blog/posts/gh200-on-lambda.md

index e7831a76c..1a87dc90e 100644

--- a/docs/blog/posts/gh200-on-lambda.md

+++ b/docs/blog/posts/gh200-on-lambda.md

@@ -42,7 +42,7 @@ The GH200 Superchip’s 450 GB/s bidirectional bandwidth enables KV cache offloa

## GH200 on Lambda

-[Lambda :material-arrow-top-right-thin:{ .external }](https://cloud.lambda.ai/sign-up?_gl=1*1qovk06*_gcl_au*MTg2MDc3OTAyOS4xNzQyOTA3Nzc0LjE3NDkwNTYzNTYuMTc0NTQxOTE2MS4xNzQ1NDE5MTYw*_ga*MTE2NDM5MzI0My4xNzQyOTA3Nzc0*_ga_43EZT1FM6Q*czE3NDY3MTczOTYkbzM0JGcxJHQxNzQ2NzE4MDU2JGo1NyRsMCRoMTU0Mzg1NTU1OQ..){:target="_blank"} provides secure, user-friendly, reliable, and affordable cloud GPUs. Since end of last year, Lambda started to offer on-demand GH200 instances through their public cloud. Furthermore, they offer these instances at the promotional price of $1.49 per hour until June 30th 2025.

+[Lambda](https://cloud.lambda.ai/sign-up?_gl=1*1qovk06*_gcl_au*MTg2MDc3OTAyOS4xNzQyOTA3Nzc0LjE3NDkwNTYzNTYuMTc0NTQxOTE2MS4xNzQ1NDE5MTYw*_ga*MTE2NDM5MzI0My4xNzQyOTA3Nzc0*_ga_43EZT1FM6Q*czE3NDY3MTczOTYkbzM0JGcxJHQxNzQ2NzE4MDU2JGo1NyRsMCRoMTU0Mzg1NTU1OQ..) provides secure, user-friendly, reliable, and affordable cloud GPUs. Since end of last year, Lambda started to offer on-demand GH200 instances through their public cloud. Furthermore, they offer these instances at the promotional price of $1.49 per hour until June 30th 2025.

With the latest `dstack` update, it’s now possible to use these instances with your Lambda account whether you’re running a dev environment, task, or service:

@@ -81,7 +81,7 @@ $ dstack apply -f .dstack.yml

> If you have GH200 or GB200-powered hosts already provisioned via Lambda, another cloud provider, or on-prem, you can now use them with [SSH fleets](../../docs/concepts/fleets.md#ssh-fleets).

!!! info "What's next?"

- 1. Sign up with [Lambda :material-arrow-top-right-thin:{ .external }](https://cloud.lambda.ai/sign-up?_gl=1*1qovk06*_gcl_au*MTg2MDc3OTAyOS4xNzQyOTA3Nzc0LjE3NDkwNTYzNTYuMTc0NTQxOTE2MS4xNzQ1NDE5MTYw*_ga*MTE2NDM5MzI0My4xNzQyOTA3Nzc0*_ga_43EZT1FM6Q*czE3NDY3MTczOTYkbzM0JGcxJHQxNzQ2NzE4MDU2JGo1NyRsMCRoMTU0Mzg1NTU1OQ..){:target="_blank"}

+ 1. Sign up with [Lambda](https://cloud.lambda.ai/sign-up?_gl=1*1qovk06*_gcl_au*MTg2MDc3OTAyOS4xNzQyOTA3Nzc0LjE3NDkwNTYzNTYuMTc0NTQxOTE2MS4xNzQ1NDE5MTYw*_ga*MTE2NDM5MzI0My4xNzQyOTA3Nzc0*_ga_43EZT1FM6Q*czE3NDY3MTczOTYkbzM0JGcxJHQxNzQ2NzE4MDU2JGo1NyRsMCRoMTU0Mzg1NTU1OQ..)

2. Set up the [Lambda](../../docs/concepts/backends.md#lambda) backend

3. Follow [Quickstart](../../docs/quickstart.md)

4. Check [dev environments](../../docs/concepts/dev-environments.md), [tasks](../../docs/concepts/tasks.md), [services](../../docs/concepts/services.md), and [fleets](../../docs/concepts/fleets.md)

diff --git a/docs/blog/posts/gpu-blocks-and-proxy-jump.md b/docs/blog/posts/gpu-blocks-and-proxy-jump.md

index cbf9ab7dc..61f28ea81 100644

--- a/docs/blog/posts/gpu-blocks-and-proxy-jump.md

+++ b/docs/blog/posts/gpu-blocks-and-proxy-jump.md

@@ -39,7 +39,7 @@ enables optimal hardware utilization by allowing concurrent workloads to run on

available resources on each host.

> For example, imagine you’ve reserved a cluster with multiple bare-metal nodes, each equipped with 8x MI300X GPUs from

-[Hot Aisle :material-arrow-top-right-thin:{ .external }](https://hotaisle.xyz/){:target="_blank"}.

+[Hot Aisle](https://hotaisle.xyz/).

With `dstack`, you can define your fleet configuration like this:

@@ -108,7 +108,7 @@ The latest `dstack` release introduces the [`proxy_jump`](../../docs/concepts/fl

through a login node.

> For example, imagine you’ve reserved a 1-Click Cluster from

-> [Lambda :material-arrow-top-right-thin:{ .external }](https://lambdalabs.com/){:target="_blank"} with multiple nodes, each equipped with 8x H100 GPUs from.

+> [Lambda](https://lambdalabs.com/) with multiple nodes, each equipped with 8x H100 GPUs from.

With `dstack`, you can define your fleet configuration like this:

@@ -174,8 +174,8 @@ an AI-native experience, simplicity, and vendor-agnostic orchestration for both

!!! info "Roadmap"

We plan to further enhance `dstack`'s support for both cloud and on-premises setups. For more details on our roadmap,

- refer to our [GitHub :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/dstack/issues/2184){:target="_blank"}.

+ refer to our [GitHub](https://github.com/dstackai/dstack/issues/2184).

> Have questions? You're welcome to join

-> our [Discord :material-arrow-top-right-thin:{ .external }](https://discord.gg/u8SmfwPpMd){:target="_blank"} or talk

-> directly to [our team :material-arrow-top-right-thin:{ .external }](https://calendly.com/dstackai/discovery-call){:target="_blank"}.

+> our [Discord](https://discord.gg/u8SmfwPpMd) or talk

+> directly to [our team](https://calendly.com/dstackai/discovery-call).

diff --git a/docs/blog/posts/gpu-health-checks.md b/docs/blog/posts/gpu-health-checks.md

index 1571935b6..cc28bb96a 100644

--- a/docs/blog/posts/gpu-health-checks.md

+++ b/docs/blog/posts/gpu-health-checks.md

@@ -64,10 +64,10 @@ Passive GPU health checks work on AWS (except with custom `os_images`), Azure (e

This update is about visibility: giving engineers real-time insight into GPU health before jobs run. Next comes automation — policies to skip GPUs with warnings, and self-healing workflows that replace unhealthy instances without manual steps.

If you have experience with GPU reliability or ideas for automated recovery, join the conversation on

-[Discord :material-arrow-top-right-thin:{ .external }](https://discord.gg/u8SmfwPpMd){:target="_blank"}.

+[Discord](https://discord.gg/u8SmfwPpMd).

!!! info "What's next?"

1. Check [Quickstart](../../docs/quickstart.md)

2. Explore the [clusters](../../docs/guides/clusters.md) guide

3. Learn more about [metrics](../../docs/guides/metrics.md)

- 4. Join [Discord :material-arrow-top-right-thin:{ .external }](https://discord.gg/u8SmfwPpMd){:target="_blank"}

+ 4. Join [Discord](https://discord.gg/u8SmfwPpMd)

diff --git a/docs/blog/posts/h100-mi300x-inference-benchmark.md b/docs/blog/posts/h100-mi300x-inference-benchmark.md